The Part about Mister Cloud!

We've finally reached the part of this series where we're going to look specifically at implementing time sync in public cloud services. We'll start with AWS and then move on to Azure. If you've used one cloud but not the other, you might find a quick search in Microsoft's AWS to Azure services comparison helpful when we come to a product with which you're unfamiliar.

AWS

Services in scope

There are a lot of different services in AWS where time synchronisation is important, but the primary one where you'll need to configure things is AWS' virtual machine service, EC2. Given that it's also one of the most-used services in AWS, we'll spend a bit of time with it.

In addition to EC2, there's also:

- ECS/EC2, the Elastic Container Service where you manage the EC2 instances on which your containers run

- ECS/Fargate, the Elastic Container Service where every container runs inside its own Firecracker micro-VM

- EKS, AWS' Kubernetes-as-a-Service offering

- Elastic Beanstalk, a Platform-as-a-Service which supports container workloads

- And the 30 other ways to run a container on AWS

For the purposes of this discussion, we'll consider all the cases where you run EC2 instances (including ECS and EKS) as the same (because they are, from a time synchronisation perspective), and all the cases where you run containers based on Firecracker VMs (including Lambda and ECS/Fargate) as the same also.

NTP in AWS: initial configuration

NTP is primarily provided in AWS via a network endpoint on the local hypervisor, accessed at the fixed addresses 169.254.169.123 and fd00:ec2::123. If you've used AWS before, these should look relatively familiar, because the instance metadata endpoints appear on 169.254.169.254 and fd00:ec2::254. What this means for NTP is that the source is extremely close (network-wise), and thus has very low latency, which is important for that NTP metric we looked at in the last post: delay.
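If you'd like to see that low delay for yourself, here's a minimal sketch of a single SNTP exchange in Python. It is for illustration only, not a replacement for chrony: it sends one client (mode 3) request and applies the standard four-timestamp offset and delay formulas from the NTP specification. The helper names are my own, and the default address only answers from inside an EC2 instance.

```python
import socket
import struct
import time

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01).
NTP_EPOCH_OFFSET = 2_208_988_800


def to_ntp(ts: float) -> tuple:
    """Split a Unix timestamp into NTP (seconds, 32-bit fraction)."""
    secs = int(ts)
    return secs + NTP_EPOCH_OFFSET, int((ts - secs) * 2**32)


def from_ntp(secs: int, frac: int) -> float:
    """Convert an NTP (seconds, fraction) pair back into a Unix timestamp."""
    return secs - NTP_EPOCH_OFFSET + frac / 2**32


def build_request(t1: float) -> bytes:
    """48-byte client packet: LI=0, VN=4, Mode=3 (0x23), with t1 as the transmit timestamp."""
    return struct.pack("!B39x", 0x23) + struct.pack("!II", *to_ntp(t1))


def parse_response(data: bytes, t1: float, t4: float) -> dict:
    """Compute offset and delay from a reply (t1 = sent, t4 = received, both local clock)."""
    if len(data) < 48:
        raise ValueError("short NTP packet")
    f = struct.unpack("!BBbb3I8I", data[:48])
    if f[0] & 0x7 != 4:
        raise ValueError("not a server (mode 4) reply")
    t2 = from_ntp(f[11], f[12])  # server receive timestamp
    t3 = from_ntp(f[13], f[14])  # server transmit timestamp
    return {
        "stratum": f[1],
        "root_delay": f[4] / 2**16,       # 16.16 fixed-point seconds
        "root_dispersion": f[5] / 2**16,  # 16.16 fixed-point seconds
        "offset": ((t2 - t1) + (t3 - t4)) / 2,
        "delay": (t4 - t1) - (t3 - t2),
    }


def query(server: str = "169.254.169.123", timeout: float = 2.0) -> dict:
    """Send one request (IPv4 or IPv6) and return the computed metrics."""
    family, socktype, proto, _, addr = socket.getaddrinfo(server, 123, type=socket.SOCK_DGRAM)[0]
    with socket.socket(family, socktype, proto) as sock:
        sock.settimeout(timeout)
        t1 = time.time()
        sock.sendto(build_request(t1), addr)
        data, _ = sock.recvfrom(1024)
        t4 = time.time()
    return parse_response(data, t1, t4)


if __name__ == "__main__":
    print(query())  # only answers from inside an EC2 instance
```

Compare its delay with what chrony reports, and please don't run something like this in a tight loop against public pool servers.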

Many pre-built AWS AMIs such as Amazon Linux and Ubuntu Linux come with chrony or ntpd installed and this source configured by default. If you start up one of these preconfigured images, you'll probably find that even before you get a chance to log in, they'll have the time synced and be looking quite healthy.

Here's some output from a new instance I spun up in Sydney (ap-southeast-2). It uses chrony, so we'll focus on that for now:

ubuntu@i-0a4f94949b0ae3854:~$ sudo -i
root@i-0a4f94949b0ae3854:~# uptime
06:57:41 up 1 min, 2 users, load average: 0.13, 0.05, 0.02
root@i-0a4f94949b0ae3854:~# chronyc -n sources
MS Name/IP address Stratum Poll Reach LastRx Last sample
===============================================================================
^* 169.254.169.123 3 4 177 13 -4651ns[-9215ns] +/- 901us
^- 2620:2d:4000:1::3f 2 6 17 61 +5872us[+5976us] +/- 130ms
^- 2620:2d:4000:1::41 2 6 17 60 +5504us[+5610us] +/- 135ms
^- 2620:2d:4000:1::40 2 6 17 61 +6273us[+6378us] +/- 130ms
^- 2400:8907::f03c:93ff:fe5b:8a26 2 6 17 63 -314us[ +413ms] +/- 30ms
^- 2401:c080:2000:2288:5400:4ff:fe45:cef1 2 6 17 63 -33us[ +320ns] +/- 39ms
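If you want to check these columns programmatically rather than by eye, a small parser helps. This is a sketch that assumes the whitespace-separated layout shown above (from `chronyc -n sources`). One detail worth knowing: Reach is an 8-bit register printed in octal, so 177 means 7 of the last 8 polls got a reply, while 377 means all 8 did.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Multipliers for the unit suffixes chronyc prints.
UNITS = {"ns": 1e-9, "us": 1e-6, "ms": 1e-3, "s": 1.0}

LINE_RE = re.compile(
    r"^(?P<mode>[\^=#])(?P<state>[*+\-?x~])\s+(?P<name>\S+)\s+"
    r"(?P<stratum>\d+)\s+(?P<poll>-?\d+)\s+(?P<reach>[0-7]+)\s+(?P<last_rx>\S+)\s+"
    r"(?P<adj>[+-]?\d+)(?P<adj_u>ns|us|ms|s)\[\s*(?P<meas>[+-]?\d+)(?P<meas_u>ns|us|ms|s)\]"
    r"\s+\+/-\s+(?P<err>\d+)(?P<err_u>ns|us|ms|s)"
)


@dataclass
class Source:
    mode: str               # '^' server, '=' peer, '#' local reference clock
    state: str              # '*' selected, '+' combined, '-' not combined, etc.
    name: str
    stratum: int
    poll: int               # log2 of the polling interval in seconds
    reach: int              # reachability register (printed in octal)
    last_rx: str
    adjusted_offset: float  # seconds: last sample, adjusted for slews since
    measured_offset: float  # seconds: the raw measurement in brackets
    error: float            # seconds: the "+/-" margin of error

    @property
    def recent_successes(self) -> int:
        """How many of the last 8 polls received a valid reply."""
        return bin(self.reach).count("1")


def parse_source_line(line: str) -> Optional[Source]:
    """Parse one `chronyc -n sources` line; return None for headers and separators."""
    m = LINE_RE.match(line.strip())
    if m is None:
        return None
    g = m.groupdict()
    return Source(
        mode=g["mode"], state=g["state"], name=g["name"],
        stratum=int(g["stratum"]), poll=int(g["poll"]),
        reach=int(g["reach"], 8), last_rx=g["last_rx"],
        adjusted_offset=int(g["adj"]) * UNITS[g["adj_u"]],
        measured_offset=int(g["meas"]) * UNITS[g["meas_u"]],
        error=int(g["err"]) * UNITS[g["err_u"]],
    )


src = parse_source_line("^* 169.254.169.123 3 4 177 13 -4651ns[-9215ns] +/- 901us")
print(src.name, src.recent_successes, src.error)
```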

And here's the default chrony config:

root@i-0a4f94949b0ae3854:~# grep '^[^#]' /etc/chrony/chrony.conf
confdir /etc/chrony/conf.d
pool ntp.ubuntu.com iburst maxsources 4
pool 0.ubuntu.pool.ntp.org iburst maxsources 1
pool 1.ubuntu.pool.ntp.org iburst maxsources 1
pool 2.ubuntu.pool.ntp.org iburst maxsources 2
sourcedir /run/chrony-dhcp
sourcedir /etc/chrony/sources.d
keyfile /etc/chrony/chrony.keys
driftfile /var/lib/chrony/chrony.drift
ntsdumpdir /var/lib/chrony
logdir /var/log/chrony
maxupdateskew 100.0
rtcsync
makestep 1 3

And a custom source file shipped by default in the Ubuntu AMI for AWS:

root@i-0a4f94949b0ae3854:~# grep '^[^#]' /etc/chrony/conf.d/*conf
server 169.254.169.123 prefer iburst minpoll 4 maxpoll 4

If we ask chrony how it thinks we're situated in terms of time accuracy, it looks pretty good:

root@i-0a4f94949b0ae3854:~# chronyc -n tracking
Reference ID : A9FEA97B (169.254.169.123)
Stratum : 4
Ref time (UTC) : Thu Feb 22 07:10:11 2024
System time : 0.000000764 seconds slow of NTP time
Last offset : -0.000001313 seconds
RMS offset : 0.000012392 seconds
Frequency : 0.683 ppm fast
Residual freq : -0.001 ppm
Skew : 0.021 ppm
Root delay : 0.000327923 seconds
Root dispersion : 0.000467478 seconds
Update interval : 16.3 seconds
Leap status : Normal

If that number after "System time" is making you go cross-eyed, allow me to assist: that's six zeros. So we are 764 nanoseconds slow of UTC, which is pretty acceptable unless you're running a high precision science experiment like, say, the Large Hadron Collider. 💥
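The chronyc documentation also gives an absolute bound on the clock error, assuming the stratum 1 source at the top of the chain is correct: |system time offset| + root dispersion + ½ × root delay. Here's a sketch that pulls those fields out of `chronyc -n tracking` output like the above and applies that formula; the field labels come from the output shown here, and the helper names are my own.

```python
import re
import subprocess


def parse_tracking(text: str) -> dict:
    """Split the 'Label : value' lines of `chronyc tracking` output into a dict."""
    fields = {}
    for line in text.splitlines():
        label, sep, value = line.partition(":")
        if sep:
            fields[label.strip()] = value.strip()
    return fields


def system_offset(fields: dict) -> float:
    """System clock minus NTP time, in seconds (negative means the clock is slow)."""
    m = re.match(r"([\d.]+) seconds (slow|fast) of NTP time", fields["System time"])
    if m is None:
        raise ValueError(f"unexpected System time: {fields['System time']!r}")
    value = float(m.group(1))
    return -value if m.group(2) == "slow" else value


def seconds(value: str) -> float:
    """Turn '0.000327923 seconds' into 0.000327923."""
    return float(value.split()[0])


def max_clock_error(fields: dict) -> float:
    """|offset| + root dispersion + root delay / 2, as described in the chronyc docs."""
    return (abs(system_offset(fields))
            + seconds(fields["Root dispersion"])
            + 0.5 * seconds(fields["Root delay"]))


if __name__ == "__main__":
    out = subprocess.run(["chronyc", "-n", "tracking"], capture_output=True,
                         text=True, check=True).stdout
    print(f"max clock error: {max_clock_error(parse_tracking(out)) * 1e6:.1f} us")
```

For the tracking output above, that bound works out to about 632 µs, comfortably inside a millisecond.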

NTP in AWS: adjusting the defaults

So out of the box we have a well-configured NTP server which has a very close source (a root delay of about a third of a millisecond) and enough other sources to demonstrate that the close source is in quite good shape, keeping our system clock within a proverbial bee's whisker of UTC. 🐝

In that case, we could choose to use the default configuration that comes with our AMIs, and basically forget about time synchronisation. And to be fair, many (most?) applications could do this and never notice. But there are a couple of reasons why we might want to think about some tweaks.

Which NTP servers?

Firstly, those first few peers after the hypervisor endpoint are a little far away (130+ milliseconds one way), and our accuracy is constrained by that distance. So let's pick some closer to home, and adjust our pools to look like this:

pool 2.au.pool.ntp.org iburst maxsources 4

I'm using this configuration because I've provisioned an IPv6-only host, and the #2 pool is the only one which provides IPv6 addresses. If I were using an IPv4 & IPv6 dual-stacked system, I'd probably stick with something close to the defaults (sans those Ubuntu servers way over there in the eastern US), like this:

pool 0.au.pool.ntp.org iburst maxsources 1
pool 1.au.pool.ntp.org iburst maxsources 1
pool 2.au.pool.ntp.org iburst maxsources 2

Doing this gets us a few other sources which are considerably closer (not as close as the hypervisor endpoint, but that's to be expected):

root@i-0a4f94949b0ae3854:~# systemctl restart chrony; sleep 300; chronyc -n sources
MS Name/IP address Stratum Poll Reach LastRx Last sample
===============================================================================
^* 169.254.169.123 3 4 377 2 -3013ns[-3619ns] +/- 555us
^- 2400:8907::f03c:93ff:fe5b:8a26 2 6 377 51 -421us[ -424us] +/- 24ms
^- 2401:d002:3e03:1f01::13 2 6 377 52 +997us[ +994us] +/- 8912us
^- 2401:c080:2000:2288:5400:4ff:fe45:cef1 2 6 377 52 +216us[ +214us] +/- 44ms
^- 2404:bf40:8880:5::14 1 6 377 51 +87us[ +85us] +/- 2670us

When is a second not a second?

The second reason (see what I did there? 😉) why we might or might not want to accept the default NTP settings is Amazon's use of leap smearing. But in order to understand leap smearing, we need to understand leap seconds.

The SI (metric) second is defined as a fixed number of oscillations of the radiation from a caesium-133 atom ⚛️, but the rotation of the earth 🌍 (which determines the length of a day) is variable and does not match a precise number of seconds. Leap seconds were introduced to correct this difference. There have been 27 such seconds inserted into the UTC time scale since 1 January 1972. (If you're eager for more detail check out Wikipedia's articles on universal time and atomic time.)

Most of us never have to care about dealing with leap seconds on our wristwatches or wall clocks, since they aren't that precise. But as we've seen, NTP can get to within microseconds or even nanoseconds of UTC, so being off by one whole second is a big deal. ⏱️

The official way that leap seconds are inserted into the time scale is that directly before midnight UTC on the date of the leap second, an additional second (labelled 23:59:60) is counted after 23:59:59, resulting in a minute with 61 seconds. Leap seconds can also be negative, in which case the last second of the day is skipped and that minute has only 59 seconds, but we've never actually had a negative leap second; they remain only a theoretical possibility.

Whilst this doesn't sound particularly tricky, the Unix and Linux time APIs were never designed to cope with them, and always assume that there are exactly 86,400 seconds in every day. So when a leap second occurs, the Linux kernel adjusts the system clock by simply rewinding to the beginning of that second and playing it over again.
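You can see the "exactly 86,400 seconds per day" assumption directly in Python's standard library. As a small illustration using the most recent leap second, at the end of 31 December 2016, `calendar.timegm()` happily accepts a seconds field of 60, but maps 23:59:60 to exactly the same Unix timestamp as midnight the following day, while `datetime` refuses to represent it at all.

```python
import calendar
from datetime import datetime

# The most recent leap second was inserted at the end of 31 December 2016 (UTC).
before = calendar.timegm((2016, 12, 31, 23, 59, 59, 0, 0, 0))
leap = calendar.timegm((2016, 12, 31, 23, 59, 60, 0, 0, 0))
after = calendar.timegm((2017, 1, 1, 0, 0, 0, 0, 0, 0))

# Unix time has no slot for 23:59:60, so it collides with the next midnight.
print(before, leap, after)  # 1483228799 1483228800 1483228800
print("leap second collides with midnight:", leap == after)

try:
    datetime(2016, 12, 31, 23, 59, 60)
except ValueError as exc:
    # datetime only allows seconds in the range 0..59
    print("datetime rejects it:", exc)
```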

Hopefully your spidey sense 🕷️ has started tingling about now and you've realised that here's where things could get messy. And in fact they have, on multiple occasions in the past, due to software and hardware bugs. 🐞

Leap second smearing

Because it's difficult and expensive to fix a bug on every piece of affected hardware and software for something that might only happen every few years, a number of companies (including AWS) came up with a less disruptive mechanism for inserting leap seconds: the leap smear.

When AWS does a leap smear they stretch out every second in a 24-hour period covering 12 hours before and 12 hours after the leap second. In that case, each second actually becomes 1.000011574 seconds (1/86,400th more than a second).

This means that the clocks on your EC2 instances and containers never jump backwards by a whole second, and any buggy software on them will continue merrily on thinking that everything is normal. But because every smeared second is slightly longer than a real one, the smeared clock runs slow: just before the point of the leap second your clocks will be nearly 500 ms behind UTC, and just after the point of the leap second (once UTC has counted its extra second) they will be nearly 500 ms ahead.
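To make the arithmetic concrete, here's a sketch of a linear 24-hour smear. It's a simple model for illustration, not AWS' actual implementation: it assumes the smear spreads one positive leap second evenly over the window from noon UTC the day before to noon UTC the day after, so 86,401 real seconds elapse while the smeared clock counts 86,400. Because the smeared clock runs slow, it lags UTC by almost 500 ms just before the leap second and leads it by almost 500 ms just after.

```python
# Model: `elapsed` is real (SI) seconds since the smear began at noon UTC;
# the window lasts 86,401 SI seconds because it contains the leap second.
SMEAR_WINDOW = 86_400   # seconds counted by the smeared clock over the window
LEAP_AT = 43_200        # the leap second begins 12 hours into the window

STRETCH = (SMEAR_WINDOW + 1) / SMEAR_WINDOW  # SI seconds per smeared second


def smeared_clock(elapsed: float) -> float:
    """Seconds shown by the smeared clock after `elapsed` SI seconds."""
    return elapsed * SMEAR_WINDOW / (SMEAR_WINDOW + 1)


def utc_seconds(elapsed: float) -> float:
    """UTC seconds since the window began, excluding the inserted 23:59:60."""
    if elapsed <= LEAP_AT:
        return elapsed
    if elapsed >= LEAP_AT + 1:
        return elapsed - 1  # UTC has spent one second on 23:59:60
    raise ValueError("inside 23:59:60, which this simple count cannot label")


def smear_offset(elapsed: float) -> float:
    """Smeared clock minus UTC, in seconds (negative means behind UTC)."""
    return smeared_clock(elapsed) - utc_seconds(elapsed)


print(f"each smeared second lasts {STRETCH:.9f} SI seconds")
for label, t in [("start of smear", 0), ("just before leap", LEAP_AT),
                 ("just after leap", LEAP_AT + 1), ("end of smear", SMEAR_WINDOW + 1)]:
    print(f"{label:>16}: {smear_offset(t) * 1000:+8.3f} ms")
```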

This might suit your application very well, or it might be unacceptable because of your legal or contractual obligations. If leap smearing is non-viable for your application, using the AWS hypervisor endpoint is a non-starter and you'll need to take explicit steps to remove references to it.

Another point to note on leap smearing is that different companies implement it differently (or not at all, as we'll see in a moment). There is no standard for leap smearing, prompting one timekeeper to comment that those who imposed it on the world without getting it standardised “should all be sent to bed without dinner.”

Azure

Let's turn to Azure now. NTP actually works much the same on Azure as it does on AWS, despite time being served via a different mechanism and the cloud using a different VM hypervisor.

Services in scope

As with AWS, there are various services based on virtual machines and containers:

- Azure Virtual Machines

- Azure Kubernetes Service (AKS), also based on Azure VMs

- App Service, a Platform-as-a-Service not dissimilar to AWS Elastic Beanstalk

- Container Apps, a container service based on AKS, but where Azure manages the control plane on the customer's behalf

- Container Instances, seemingly the closest equivalent to Fargate in Azure's container offerings

NTP in Azure

If we need to configure NTP in Azure we do it in the usual fashion, by adjusting the configuration files for our chosen NTP service. If you use a distribution which configures time synchronisation by default, you'll probably end up with something pretty reasonable. Microsoft also provides some starting advice for NTP configuration in Azure, although as at this writing it hasn't been updated for more recent Linux distributions.

Local PTP device

The primary difference between AWS and Azure time sync is that whilst AWS provides a network-accessible NTP endpoint, Azure provides a locally-accessible PTP (Precision Time Protocol) device within each VM through the hypervisor.

As we've already seen, adding more sources allows NTP to validate the quality of its time, and Azure is no different in this regard. So we will generally follow the same pattern of using the host hypervisor, and also augmenting it with external sources.

Not leap smeared?

The Azure hypervisor PTP device is probably not leap smeared. I say "probably", because despite searching for it on numerous occasions I've been unable to find definitive information confirming this. The closest I have found is this post on the Microsoft forums, which states:

Leap second smearing is not UTC-compliant and as such, Windows does NOT implement leap second smearing.

My assumption is that what applies to Windows also applies to Hyper-V when running Linux guests. If you know of any definitive information to confirm or deny this, please reach out.

PTP device: pros & cons

The difference between the local network NTP service (as found on AWS) and a local PTP device (as found on Azure) is that the PTP device can be accessed much more quickly, because it bypasses the networking stack. On the other hand, with the PTP device we lose visibility of the reported stratum, reference id, root delay, and root dispersion that NTP provides, so the NTP service cannot assess the quality of that source.

The PTP device is supported natively by chronyd, but not by ntpd. (If you want to use the latter you'll also need the phc2sys daemon from the linuxptp package, and connect it up using the shared memory driver in ntpd.) The PTP device is also usually not accessible in containers, so it's not possible to monitor their time quality via this method.
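On a Linux VM you can see which PTP hardware clocks the kernel exposes by reading `/sys/class/ptp/*/clock_name`; on Hyper-V guests, the clock registered by the hv_utils driver is named `hyperv`. Other PTP clocks (for example from a network adapter) may also be present, so it's unwise to assume `/dev/ptp0` is the hypervisor's. Here's a sketch that finds the right device and prints a chrony `refclock PHC` line for it. The poll/dpoll/offset values are illustrative assumptions, so check Microsoft's current guidance before copying them, and note that many Azure images also provide a stable `/dev/ptp_hyperv` symlink via udev.

```python
from pathlib import Path
from typing import Optional


def find_ptp_clocks(sys_root: Path = Path("/sys/class/ptp")) -> dict:
    """Map /dev/ptpN device paths to the clock name reported by the driver."""
    clocks = {}
    if not sys_root.is_dir():
        return clocks  # no PTP clocks visible (e.g. inside most containers)
    for entry in sorted(sys_root.iterdir()):
        name_file = entry / "clock_name"
        if name_file.is_file():
            clocks[f"/dev/{entry.name}"] = name_file.read_text().strip()
    return clocks


def hyperv_refclock_line(clocks: dict) -> Optional[str]:
    """Return a chrony refclock line for the Hyper-V clock, if one exists."""
    for device, name in clocks.items():
        if name == "hyperv":
            # Illustrative options only; tune poll/dpoll for your environment.
            return f"refclock PHC {device} poll 3 dpoll -2 offset 0"
    return None


if __name__ == "__main__":
    line = hyperv_refclock_line(find_ptp_clocks())
    print(line or "No Hyper-V PTP clock found")
```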

Testing the services

So now that we know how NTP works at a fundamental level in AWS and Azure, let's take a look at the relative quality of the clocks in each of the different cloud services we've mentioned above.

Experiment parameters

Method

To test time synchronisation in AWS and Azure, I ran an experiment in each service. The method was:

- run chrony with logging enabled for 12-24 hours on several different instances of the same service (if possible)

- gather and parse the logs, load them into InfluxDB for analysis and visualisation

- repeat this process in AWS and Azure with VMs and several container options
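The parsing step can be as simple as turning each line of chrony's tracking log into InfluxDB line protocol. This sketch assumes the column order documented for the `tracking` log in chrony.conf (date, time, reference IP, stratum, frequency, skew, offset, leap status, combined sources, offset SD, remaining correction, root delay, root dispersion, max error); check it against the header in your own log, as layouts can differ between chrony versions. The measurement, tag, and field names are my own choices, and the host name is assumed not to need line-protocol escaping.

```python
import socket
import sys
from datetime import datetime, timezone
from typing import Optional


def tracking_to_line_protocol(line: str, host: str) -> Optional[str]:
    """Convert one chrony tracking.log line into an InfluxDB line-protocol record."""
    cols = line.split()
    # Skip the repeated header and separator lines, and anything truncated.
    if len(cols) < 14 or not cols[0][:1].isdigit():
        return None
    when = datetime.strptime(f"{cols[0]} {cols[1]}", "%Y-%m-%d %H:%M:%S")
    ns = int(when.replace(tzinfo=timezone.utc).timestamp()) * 1_000_000_000
    fields = {
        "stratum": f"{int(cols[3])}i",  # 'i' suffix marks an integer field
        "freq_ppm": repr(float(cols[4])),
        "skew_ppm": repr(float(cols[5])),
        "offset": repr(float(cols[6])),
        "offset_sd": repr(float(cols[9])),
        "root_delay": repr(float(cols[11])),
        "root_dispersion": repr(float(cols[12])),
        "max_error": repr(float(cols[13])),
    }
    field_set = ",".join(f"{key}={value}" for key, value in fields.items())
    return f"chrony_tracking,host={host},source={cols[2]},leap={cols[7]} {field_set} {ns}"


if __name__ == "__main__":
    # Usage: python tracking_to_influx.py /var/log/chrony/tracking.log > tracking.lp
    with open(sys.argv[1]) as log:
        for line in log:
            record = tracking_to_line_protocol(line, socket.gethostname())
            if record:
                print(record)
```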

Goals

In terms of goals, I set some relatively modest parameters:

- offset, root delay, and root dispersion should all be ± 10 milliseconds maximum, but ± 1 ms is preferred

- all of these, along with frequency error, should have relatively little variation over time
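Checking a set of samples against those goals is simple arithmetic. Here's a minimal sketch, assuming you've already extracted signed offsets (or root delay/dispersion values) in seconds from the logs; the values in the usage line are made up for illustration.

```python
from statistics import median, pstdev

PREFERRED = 0.001  # ± 1 ms: preferred bound
MAXIMUM = 0.010    # ± 10 ms: maximum acceptable bound


def summarise(samples: list) -> dict:
    """Summarise signed samples (in seconds) against the experiment's goals."""
    if not samples:
        raise ValueError("no samples to summarise")
    n = len(samples)
    return {
        "median": median(samples),
        "worst": max(samples, key=abs),
        "spread": pstdev(samples),  # population std deviation: variation over time
        "within_preferred": sum(abs(s) <= PREFERRED for s in samples) / n,
        "within_maximum": sum(abs(s) <= MAXIMUM for s in samples) / n,
    }


print(summarise([50e-6, -120e-6, 0.0015, -3e-6]))
```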

Caveats

This experiment comes with the following important caveats:

- I was constrained by a restricted budget and account limits (particularly in Azure, where I was using a trial account with very low limits), and sometimes by the nature of the service (some don't allow easily scaling out to multiple instances).

- It's impossible to predict and cover all the hardware on which a workload could run. AWS has 606 different available instance types in ap-southeast-2 as I'm writing this, and I only tested three of them.

- These results are not statistically rigorous, given the small sample sizes mentioned above, although in a couple of cases they probably came close to a reasonable level of confidence.

- It represents a snapshot of the time sync quality of the clouds at the point of my experiment and doesn’t take into account any improvements they might have made recently. The original AWS EC2 and Azure VM measurements were taken between December 2021 and January 2022, and the container measurements were taken in January 2023. Generally, I would expect things to have gotten better rather than worse since then.

⚠️ Graphs ahead!

“This is a graph, therefore it’s science.” -- Richard Turton

Seriously, if you thought we had a lot of graphs in part 1, there are a lot more in this part. Non-visually oriented readers may want to skip ahead to the takeaways. Note that the vertical (and sometimes horizontal) scale varies from graph to graph, so please keep that in mind when interpreting them.

Before we look at the results of my cloud experiment, let's take a quick level-set by looking at the same measurements on some low-end bare metal hardware. The idea here is to get a feel for how much being inside a VM or container impacts time sync.

The test hardware is a lightly-loaded HP MicroServer Gen8 on my home LAN, with a Xeon E3 CPU. It lives in a non-air-conditioned environment, but on a voltage-regulating UPS. Remember what we said about hardware clocks being made out of physical materials? Temperature, voltage, and even CPU load can all affect time sync accuracy.

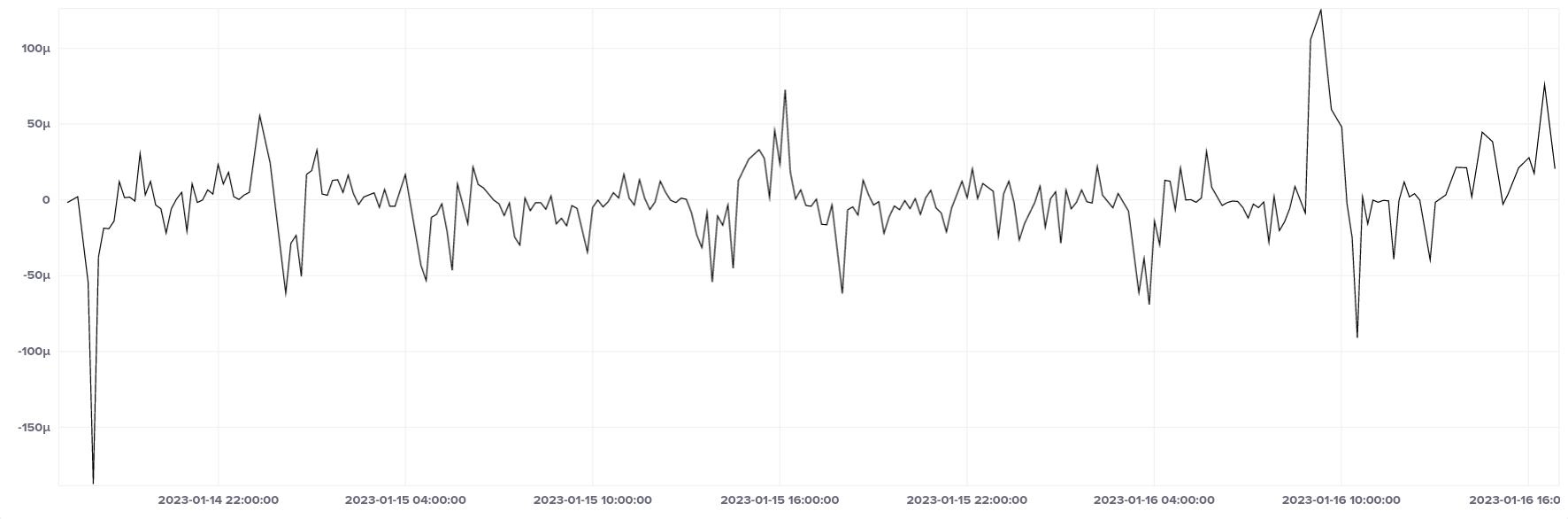

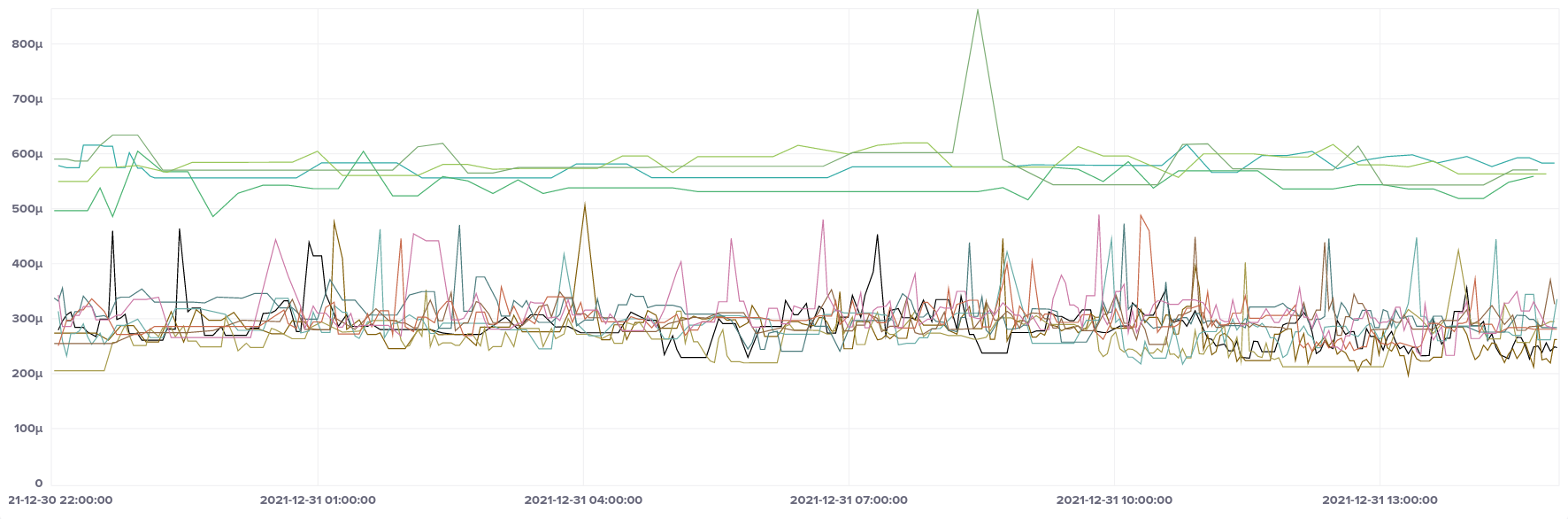

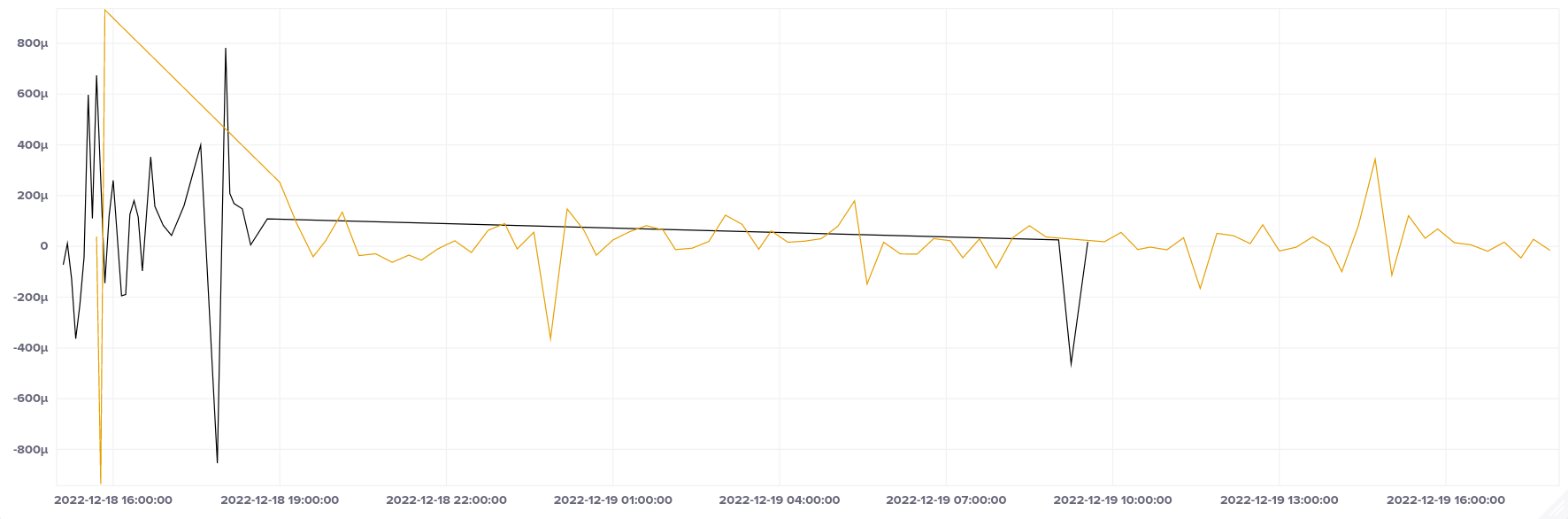

Offset

Here's the median offset of the bare metal server over a roughly 1-day period. I should add that this test was done at the height of a Queensland summer, so the temperature and humidity were likely very high compared with most data centre environments. The range varies between approximately 130 microseconds ahead and 200 microseconds behind UTC, but spends most of its time within ± 50 µs.

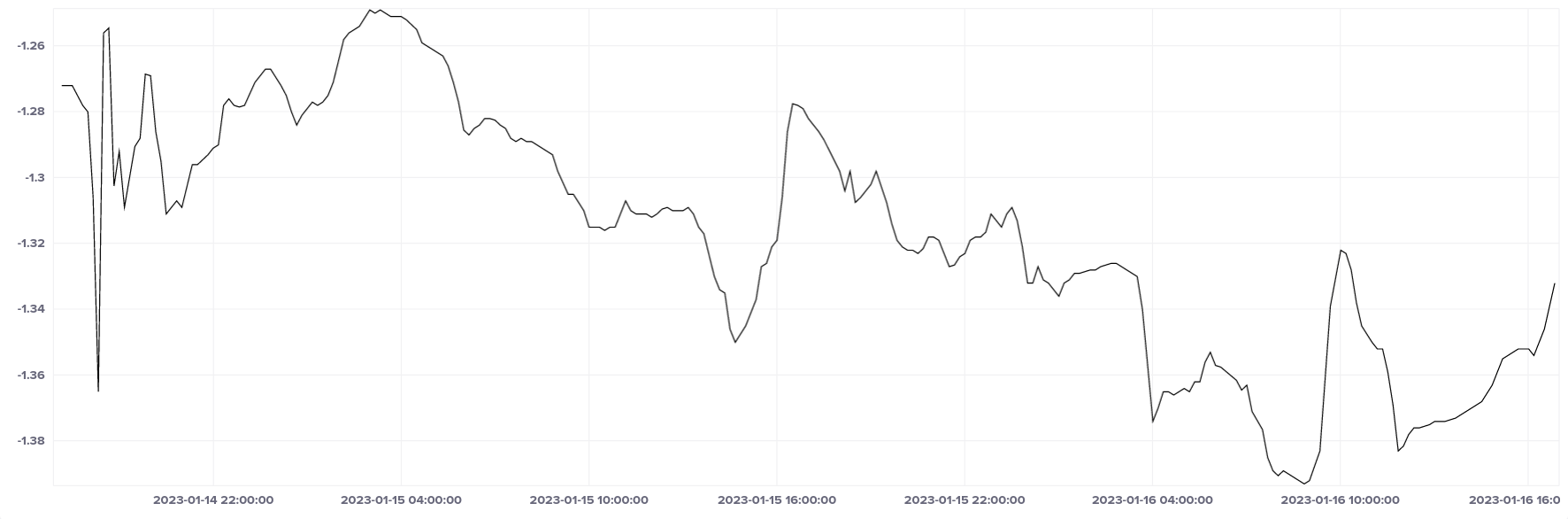

Frequency

Frequency over the same period looks like it's rather uneven, but actually all the values are between -1.40 and -1.24 ppm, so it's a reasonably stable clock.
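To put those frequency figures in perspective: a frequency error in parts per million (ppm) translates directly into how far the clock would drift per day if NTP stopped correcting it. A quick sketch of that arithmetic, assuming chrony's convention that a positive value means the clock runs fast:

```python
SECONDS_PER_DAY = 86_400


def drift_per_day(freq_ppm: float) -> float:
    """Seconds gained (+) or lost (-) per day by a clock with this frequency error."""
    return freq_ppm * 1e-6 * SECONDS_PER_DAY


# The bare metal range above, plus the 0.683 ppm EC2 figure from earlier.
for ppm in (-1.40, -1.24, 0.683):
    print(f"{ppm:+.3f} ppm -> {drift_per_day(ppm) * 1000:+.1f} ms/day")
```

So, left to its own devices, the MicroServer's clock would lose somewhere around 107 to 121 ms a day. What matters for NTP is that the error is stable, since a steady frequency error is easy to correct.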

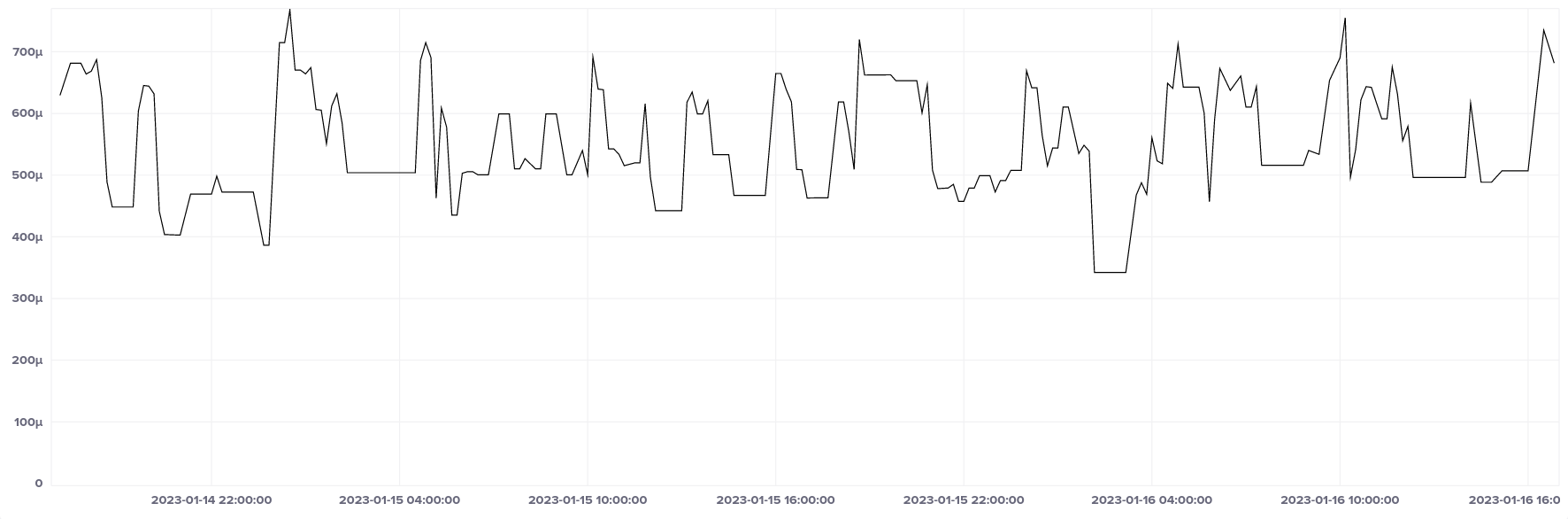

Root delay

Our host is one network hop away from its closest stratum 1 source on a 1 Gbps Ethernet connection. Best root delay is around 350 µs, worst around 800 µs.

So hopefully that sets some context for our cloud experiments. The hardware in question is not particularly powerful or modern, but it easily meets our goals for offset and frequency.

OK, bring on Mister Cloud!

AWS EC2

I tested several different types of EC2 instance, all in the T family of burstable CPU instances, with AMD, ARM, and Intel CPUs all tested. None of the instances used up its burst CPU allocation during the experiment, so they would not have been throttled by AWS. (NTP is a very CPU-efficient protocol, so you're never likely to encounter it causing any problems with system load.)

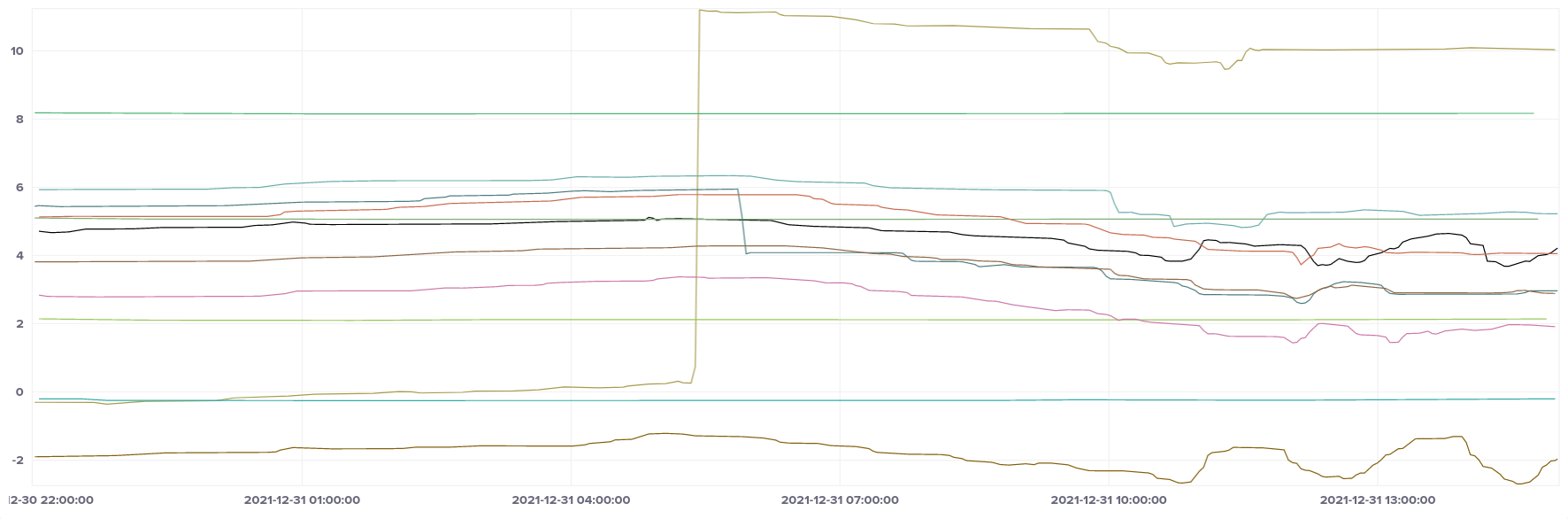

Offset

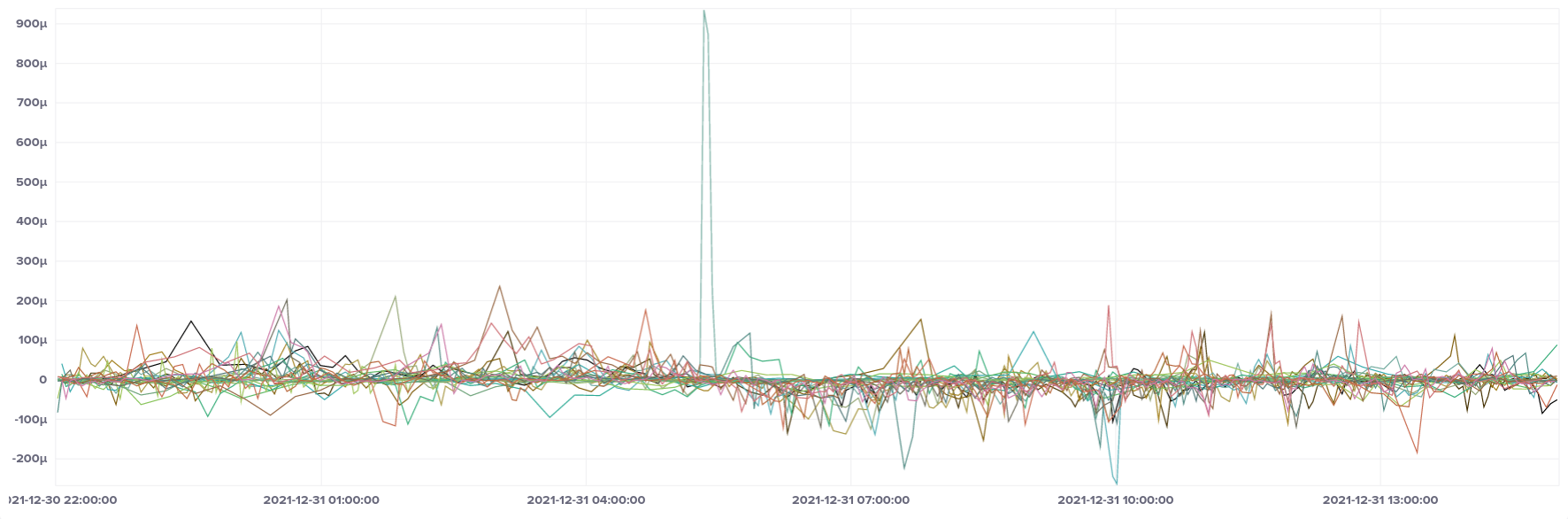

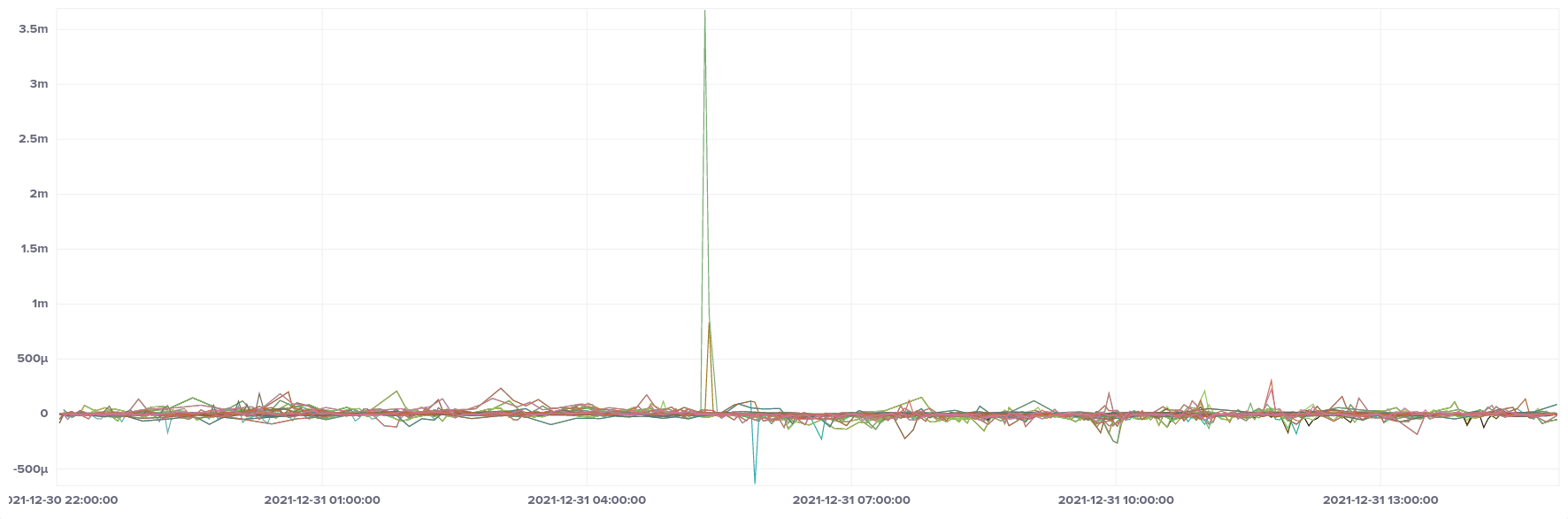

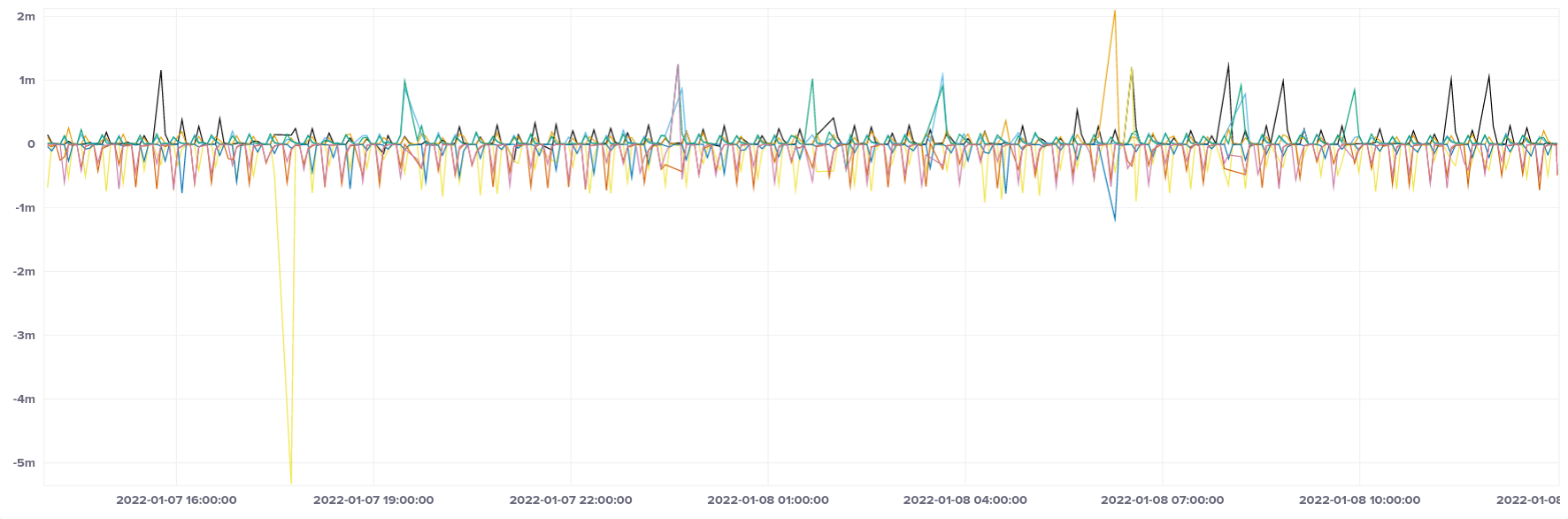

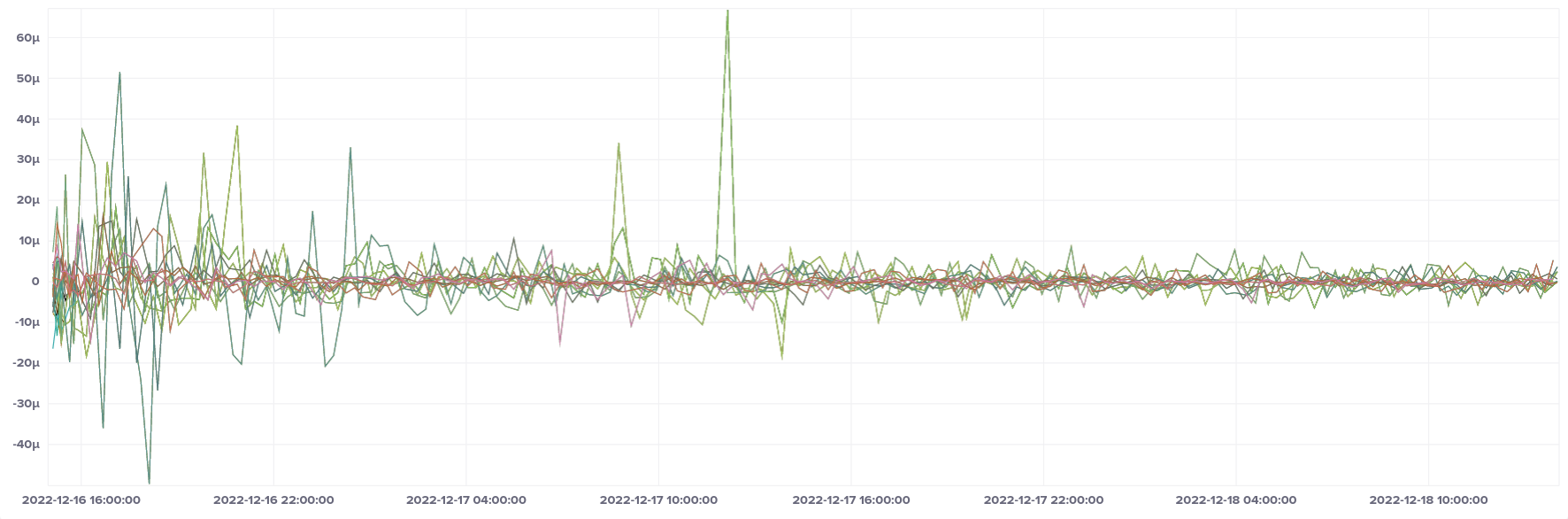

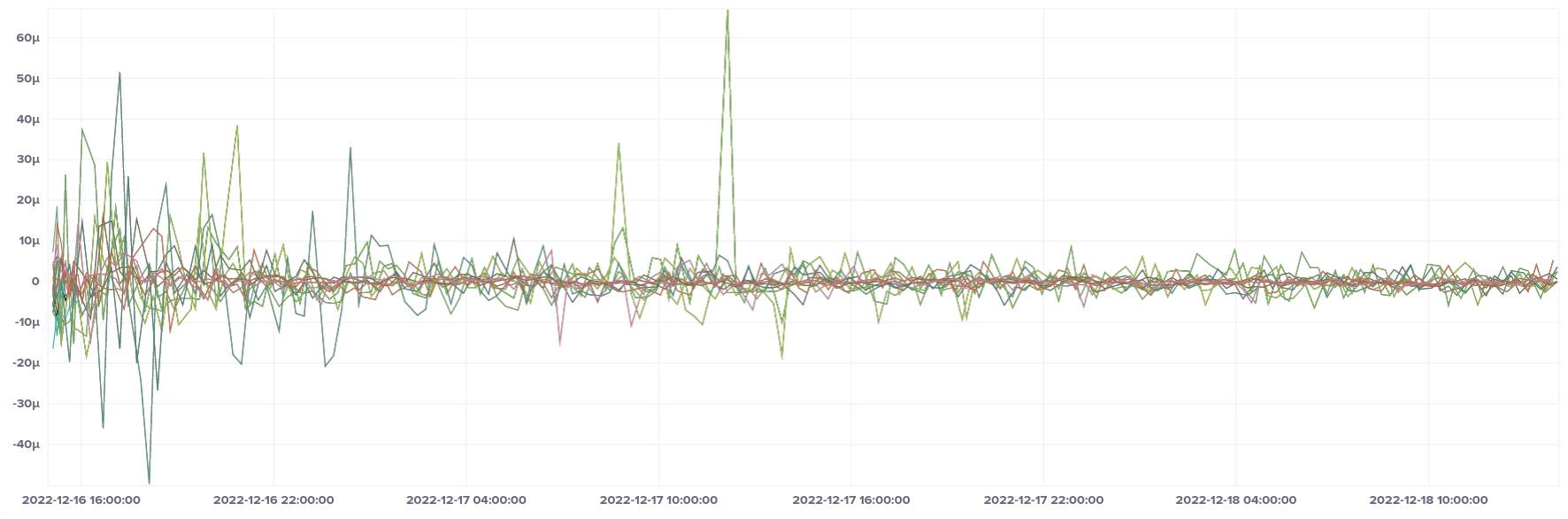

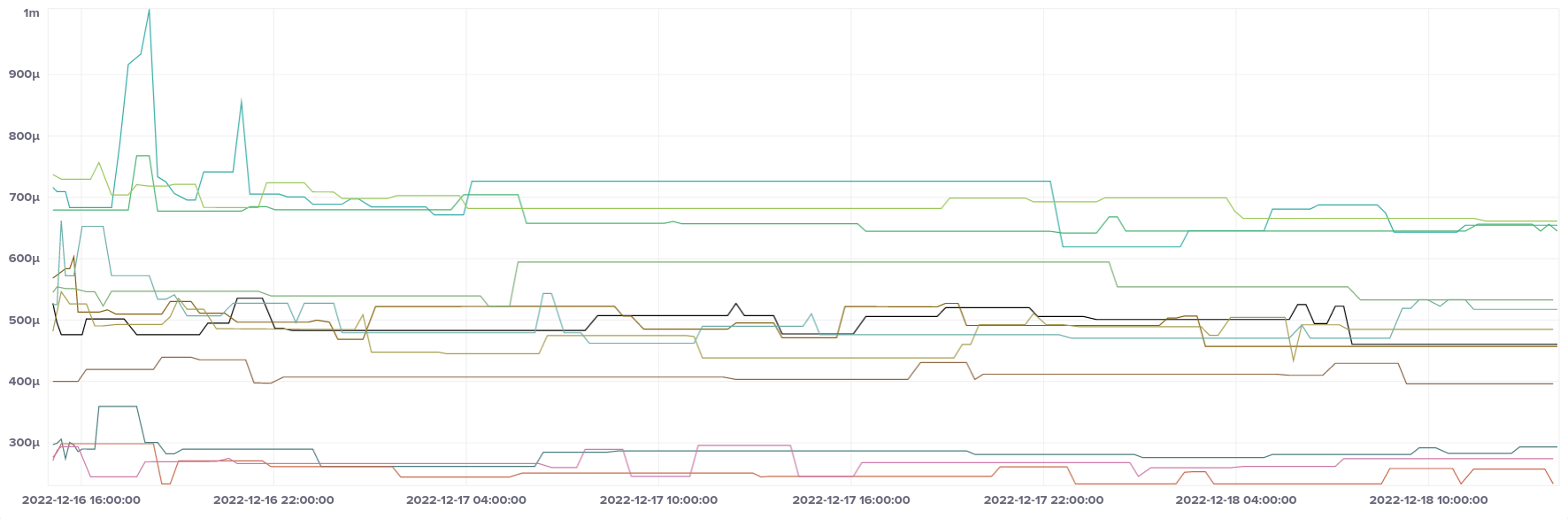

Here's the median offset of our full selection of EC2 hosts:

Apart from one spike in the middle of the graph, everything looks pretty good, falling roughly into the ± 250 µs range, with most samples also falling into the ± 50 µs band, just like our bare metal host. That spike by one instance in the middle of the graph led me to take a look at maximum and minimum values as well:

So the extremities of the graph are a bit further out than we'd like, but they're very much outliers, with the majority of samples still in our preferred band.

Breaking it down by CPU type, we can see that the large spike only affected ARM instances (t4g instance type), whereas AMD and Intel had no such anomalies.

(Graphs: offset for ARM (t4g), AMD (t3a), and Intel (t2) instances.)

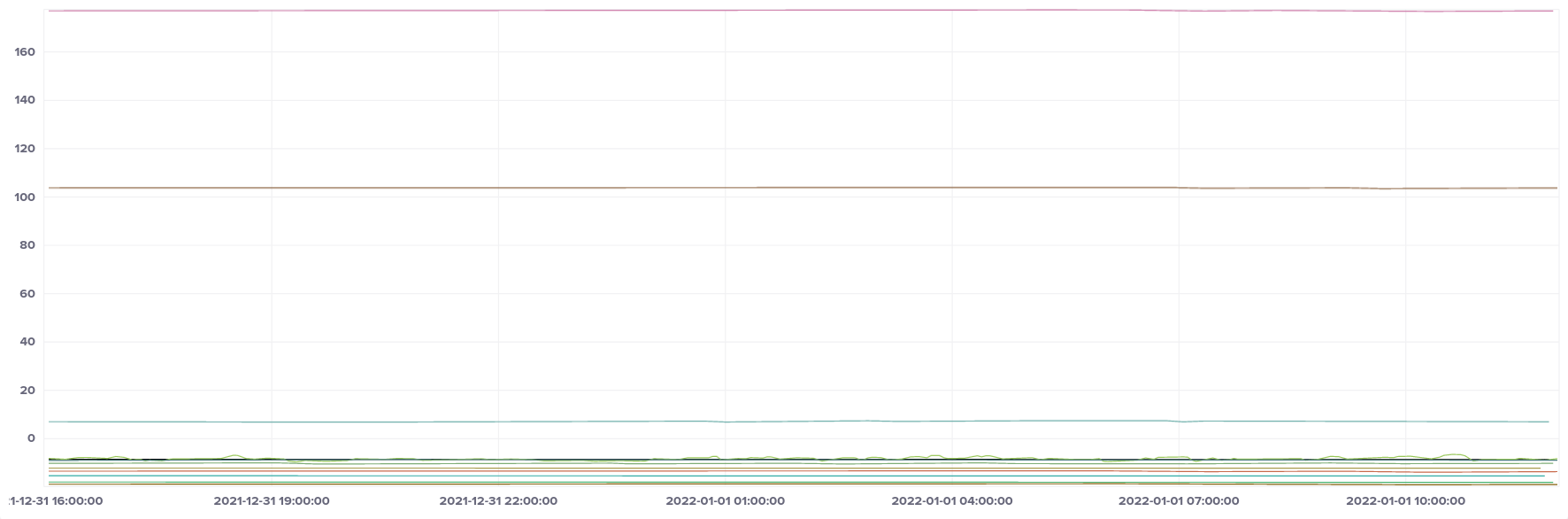

Frequency

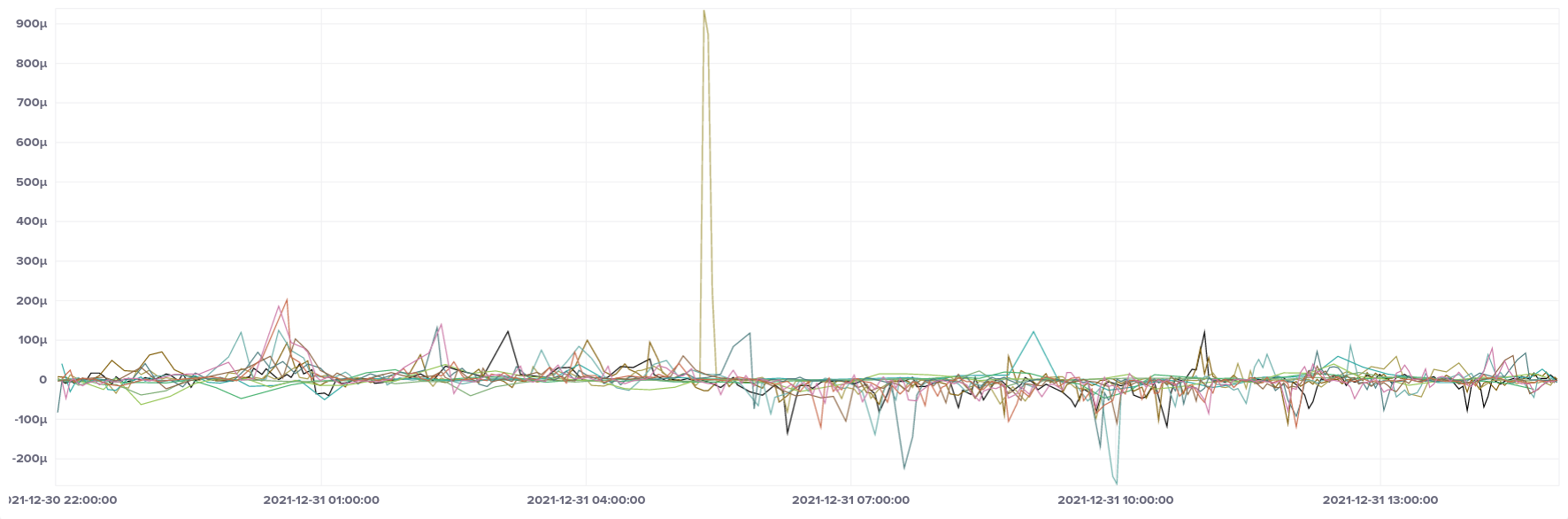

Let's take a look at frequency error, broken down by CPU type.

ARM (t4g)

There are a couple of large jumps in frequency on the ARM instances, which are likely the cause of the offset spikes. This may have been caused by the instance being live migrated to another physical host, although that's only a guess on my part.

AMD (t3a)

AMD CPUs have a much larger range in magnitude of frequency error, but each instance is quite stable, except for one that seemed to float around a bit (light green in colour, just below zero on the Y axis).

Intel (t2)

Intel CPUs seem to be the least variable in terms of frequency, and fall into a narrower band of values than their AMD counterparts (although not as narrow overall as ARM).

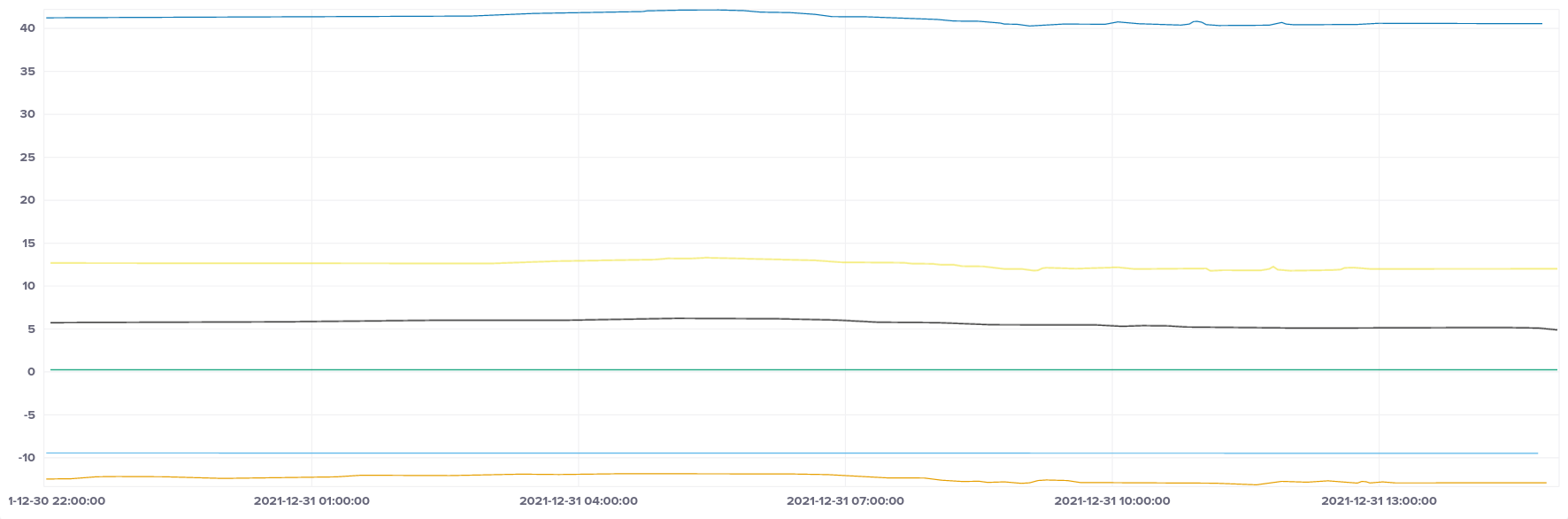

AZs - root delay

One interesting data point shows up when looking at root delay: depending on the AZ the instance is deployed in, the root delay falls into different bands. Here's the ARM CPU data showing this - there were 9 instances deployed, and the 3 in AZ B showed a minimum root delay of 500 µs, whereas those in AZ A and C were as low as 200 µs, always staying in a band below their AZ B counterparts. My best guess is that either AWS did not deploy a stratum 1 NTP server in the data centres comprising AZ B, or that it was offline at the time I ran the test. (This doesn't compromise the offset, as we can see from the fact that there's no noticeable banding in the corresponding offset graph above.)

Reboots

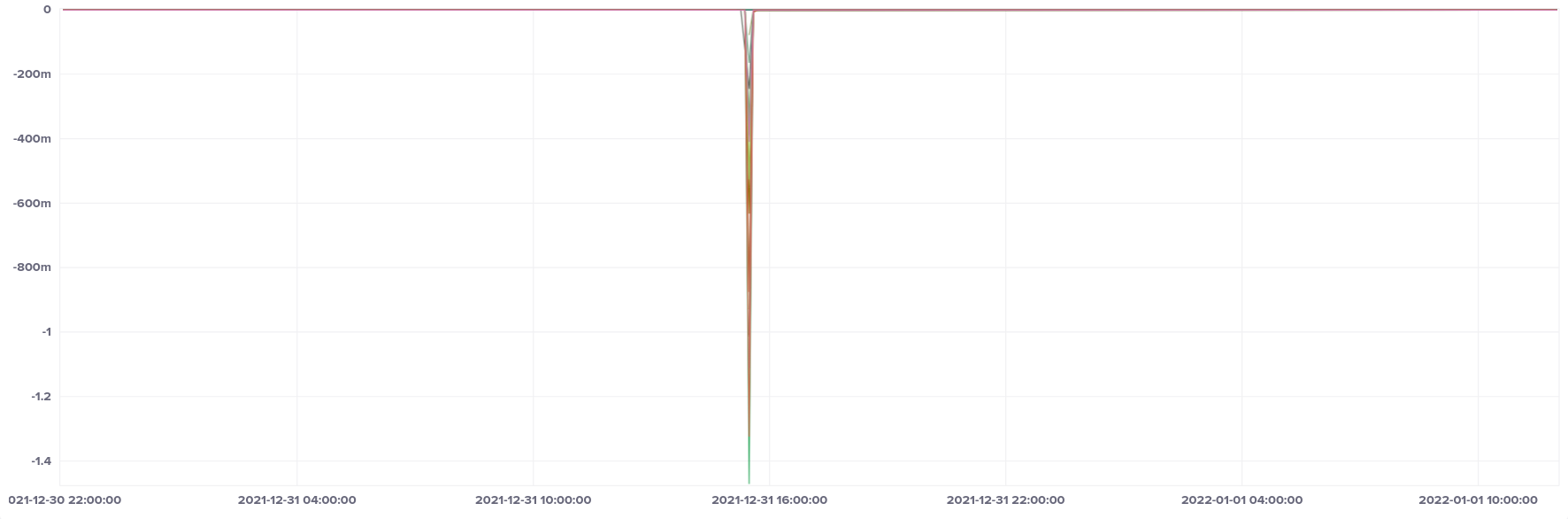

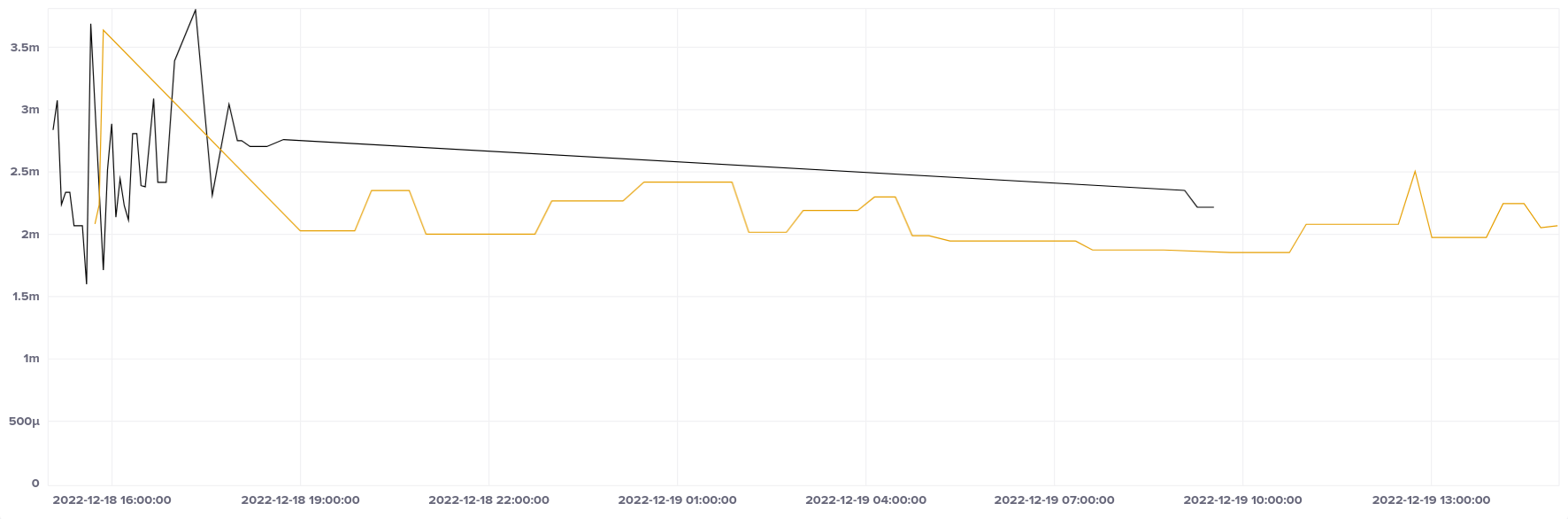

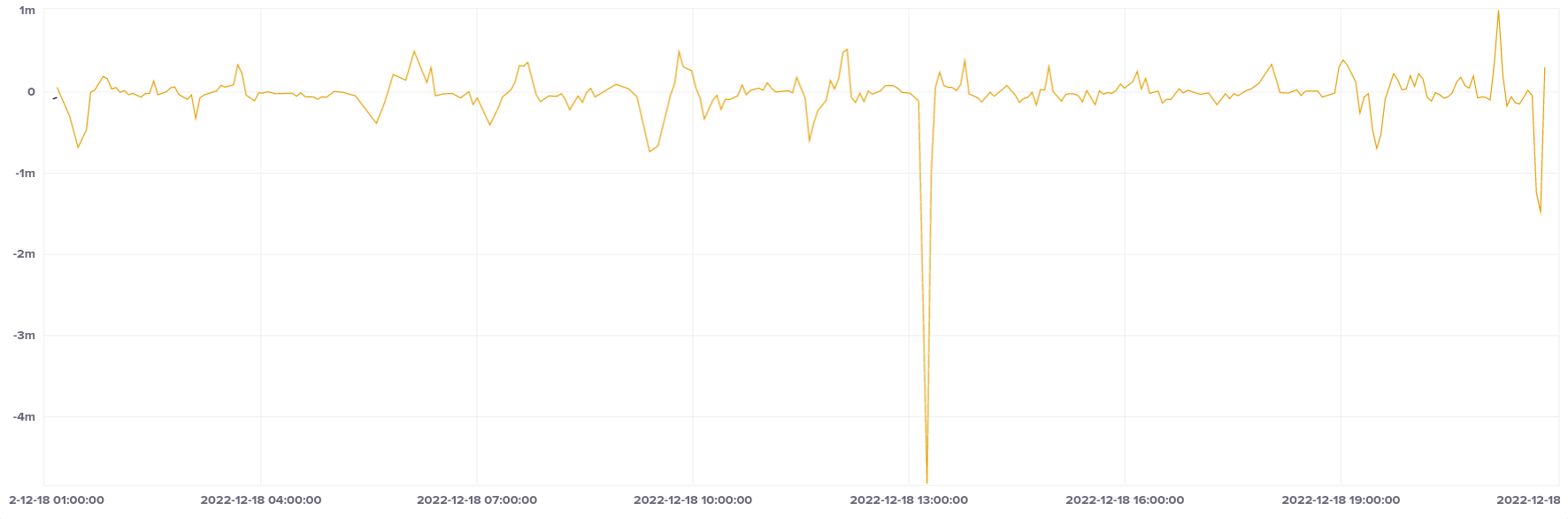

All of the results we've looked at so far are from a continuous test, where chrony was running constantly. If we include a reboot of the instance in our measurement period, we see quite a dramatic difference. Here's the median offset showing a reboot:

Note the scale - our instance clocks are up to 1.5 seconds behind after a reboot. All of the variations we saw in the other offset graphs (including the outlier on the ARM graph) are buried in that line at zero on the Y axis compared to our reboot.
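One way to avoid starting a time-sensitive workload with a clock like that is to gate it on chrony reporting a small remaining correction. chronyc has a `waitsync` command for this (`waitsync [max-tries [max-correction [max-skew [interval]]]]`, where a max-skew of 0 means skew isn't checked); the sketch below wraps it, and the tries, threshold, and interval values are illustrative assumptions. On systemd distributions, your chrony package may also ship a `chrony-wait.service`, in which case ordering your service after `time-sync.target` achieves something similar.

```python
import subprocess
import sys


def wait_for_sync(max_tries: int = 30, max_correction: float = 0.001,
                  interval: float = 2.0, chronyc: str = "chronyc") -> bool:
    """Block until chronyd reports a remaining correction below max_correction seconds.

    Returns False if chronyc gives up after max_tries or isn't installed.
    """
    cmd = [chronyc, "waitsync", str(max_tries), str(max_correction), "0", str(interval)]
    try:
        result = subprocess.run(cmd, capture_output=True, text=True)
    except FileNotFoundError:
        return False  # chronyc not available
    return result.returncode == 0


if __name__ == "__main__":
    if not wait_for_sync():
        sys.exit("clock not synchronised; refusing to start")
    # ... start the time-sensitive workload here ...
```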

Azure Virtual Machines

Let's take a look at the situation for virtual machines over in Azure. At the time only Intel CPUs were available in the Azure Australia East region.

For this and all the remaining tests, I haven't included frequency error, because it was pretty stable on all platforms tested, and honestly wasn't very interesting to look at. This isn't surprising, given that the CPU architectures are mostly uniform, and both AWS and Azure likely have well-controlled physical data centre environments in terms of temperature, humidity, and voltage.

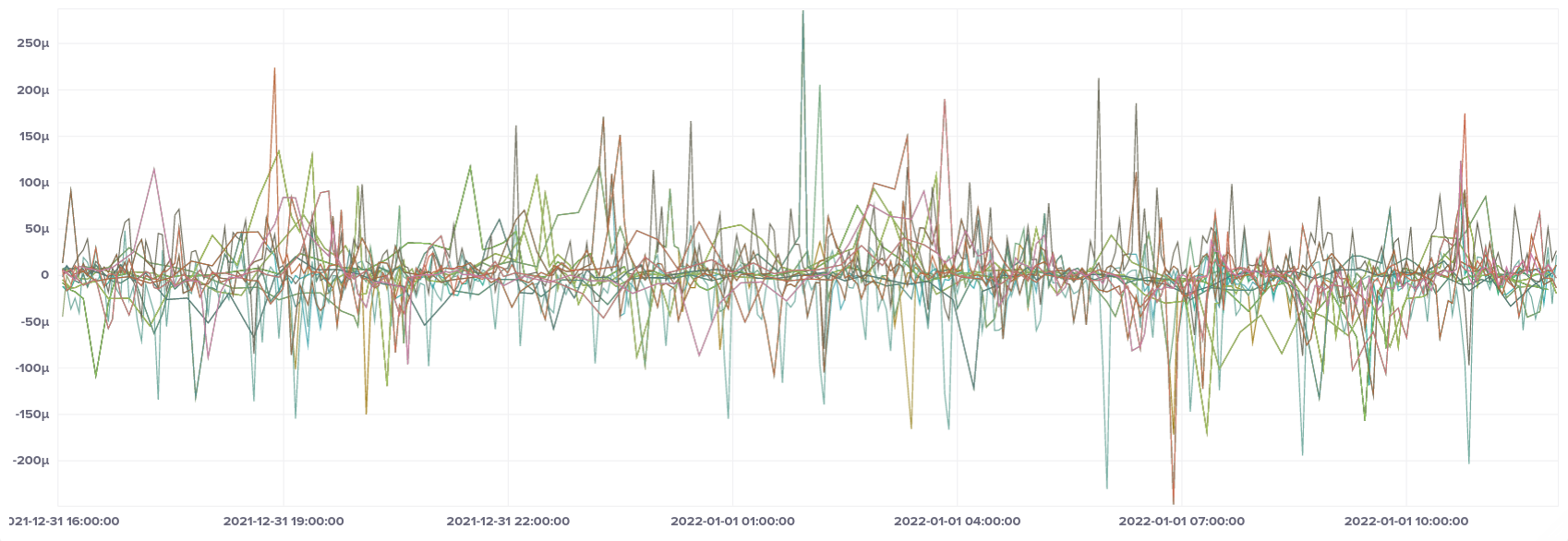

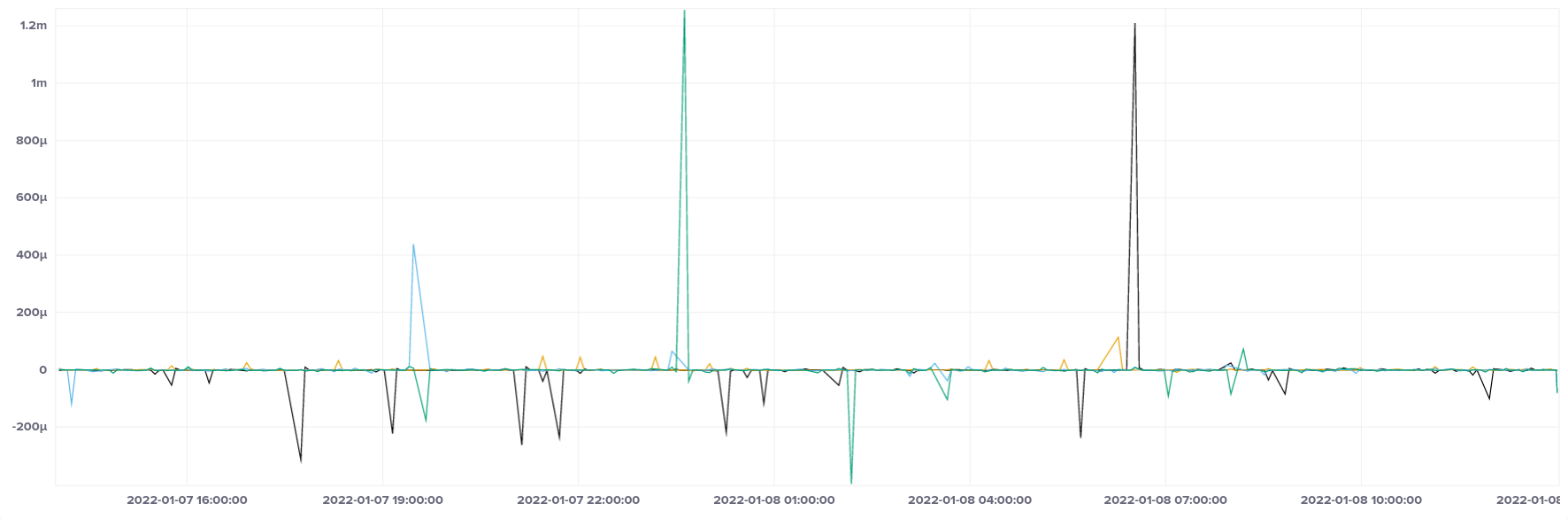

Offset (median)

In terms of offset we see a somewhat more spiky graph than AWS EC2, with two large outliers, but with most samples falling into the ± 200 µs band:

Offset (min & max)

If we look at the minimum and maximum offset, we see a little bit of a different story. Generally values are still falling into our preferred range of ± 1 ms, but there are suspiciously regular spikes at 15-minute intervals, which leads me to suspect that there's a regular process on the hypervisor which ties up the CPU momentarily. These instances were spread across availability zones, so there is no reason to suspect that they're being affected by noisy neighbours, although we can't rule that out.

AWS Elastic Beanstalk

During my testing I failed to get Elastic Beanstalk to run my container, so was unable to obtain detailed logs for analysis. During troubleshooting I did log into the EC2 host and confirm that it was using a default chrony configuration for Amazon Linux, which should share the basic characteristics of EC2 above. Due to time constraints and the fact that I've never encountered a customer running Elastic Beanstalk in production, I didn't invest a lot of time in getting this working.

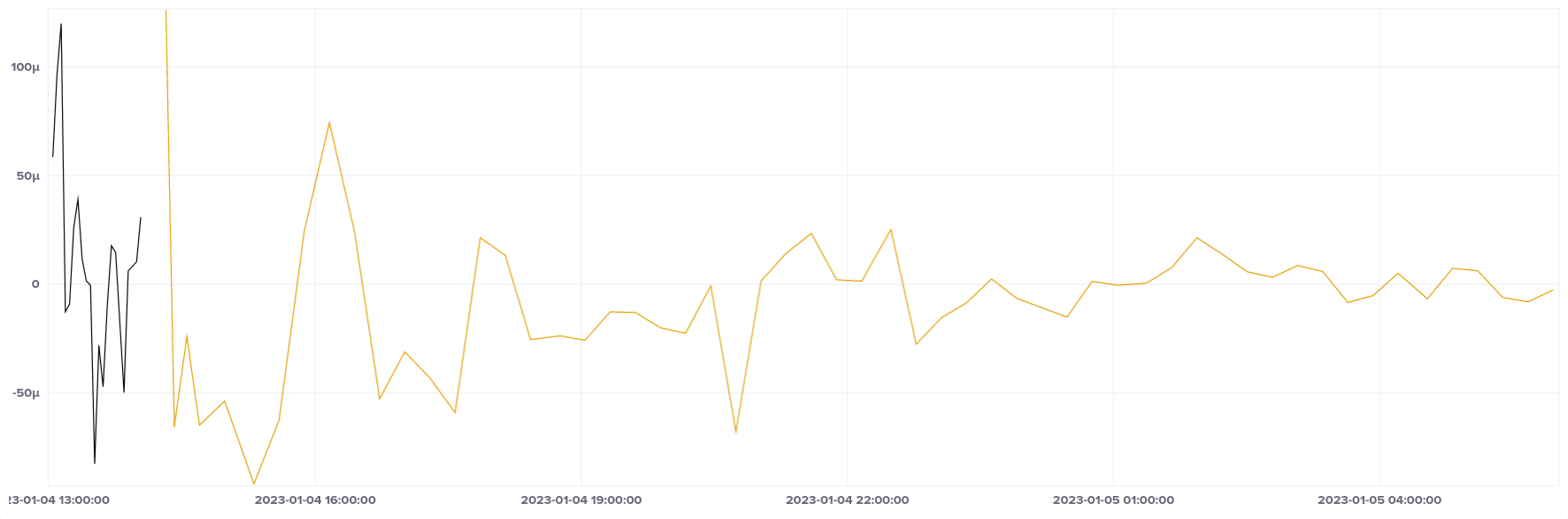

Azure App Service

With Azure App Service we're running the test in a container, so we can't read the PTP device, and our offsets instead reflect chrony's selected sync source at the point of each sample. This switched back and forth between a couple of the configured sources at various times over the lifetime of the instance (hence the two lines on the graphs), but settled down after about 3 hours of running the container. All samples fell within our goal range.

Because we need to go outside Azure to get to our sync hosts, our root delay is necessarily larger than it would otherwise be, but still quite reasonable:

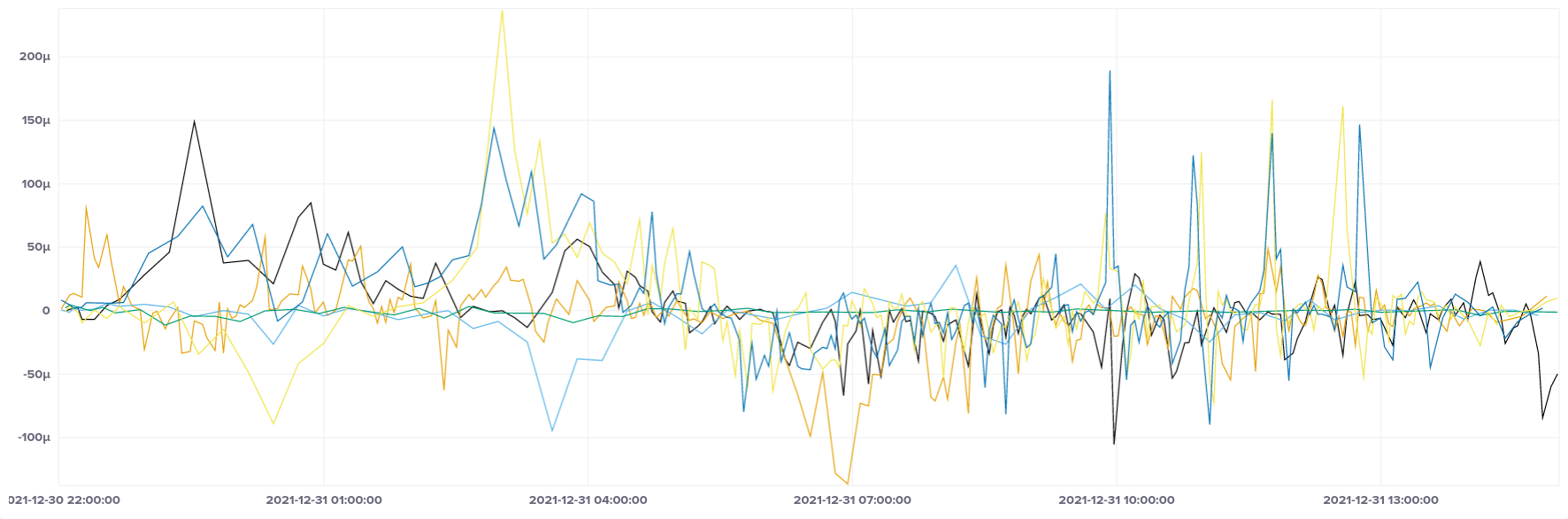

AWS Fargate

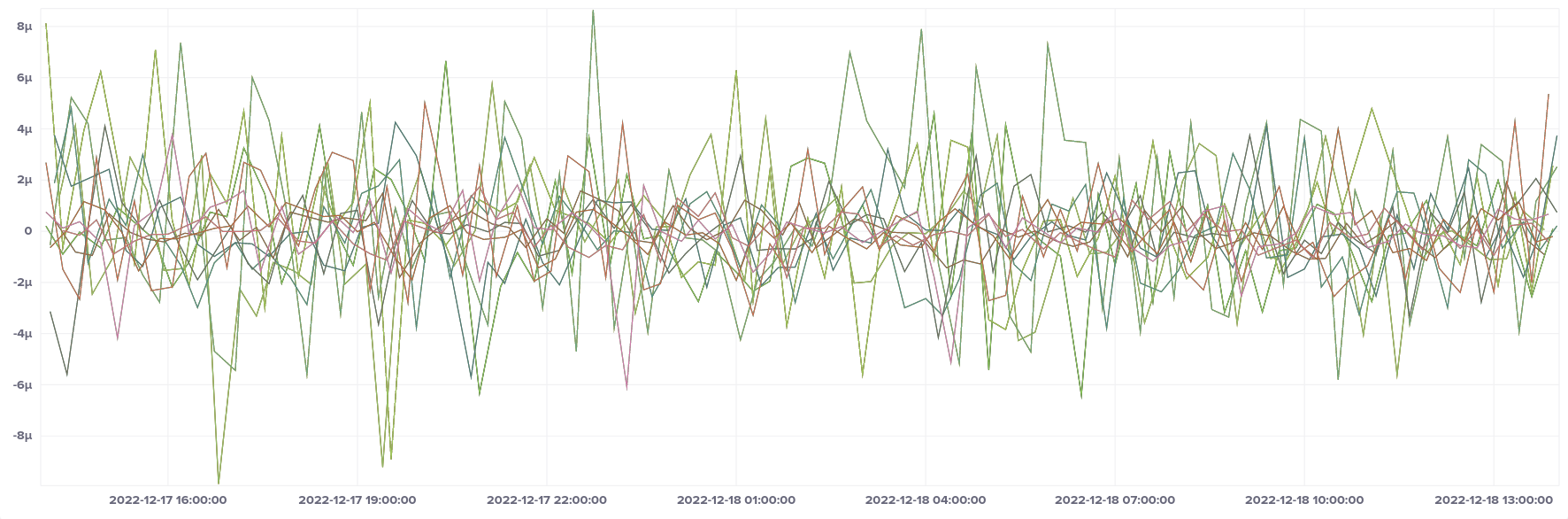

Moving on to ECS/Fargate, we see very reasonable median offsets, with no values outside ± 70 µs.

Looking at minimum and maximum offsets, they look essentially the same. Fargate showed no problems with outliers.

Zooming in on the second half of that offset graph, a 24-hour period, we see the band become even more tightly grouped, all within ± 10 µs.

Fargate shows similar groupings in root delay depending on which AZ it is deployed in. During my testing three distinct bands showed in root delay. I'm not sure whether this means that there was only one stratum 1 server available across all three AZs, or whether there are just slightly different network topologies in some AZs. But like EC2, it had no noticeable effect on offsets.

Overall, Fargate has the most accurate clocks of any service I tested. The efficiency goals of the Firecracker micro-VM service really seem to have been reached when it comes to clock accuracy.

Azure Container Apps

Azure Container Apps is a Kubernetes-powered container service. This was one of the more limited tests due to service limits on trial accounts. Container Apps had a couple of offset outliers, but largely stayed within our ideal band. Like other container services in Azure, we can't access the PTP device.

Azure Container Instances

Azure Container Instances was also a limited test due to service limits, and again we can't access the PTP device. It seems to be the lowest overhead Azure container service, based on the low minimum and maximum offsets.

Takeaways

So now we've had the opportunity to survey time sync in the different services in AWS and Azure, let's wrap up with a summary and some practical advice.

Findings

Our first general observation is that the overwhelming majority of samples on all of the tested services fell well within the bounds of our (admittedly arbitrary) 1 ms limit. So, for the majority of applications which are not time-sensitive at sub-millisecond precision, NTP works fine in cloud VMs and containers - even in default, potentially non-optimal configurations.

And secondly, AWS VMs and containers were generally more consistent in my tests than their Azure counterparts, with Fargate offering accuracy superior to all other services tested.

General recommendations

- When it comes to time sync configuration in the cloud, the most important starting point is to know whether or not your workloads are time-sensitive, and what level of precision you are expecting out of your NTP implementation.

- If you aren't sure whether or not your workloads are time-sensitive, then enable statistics in chronyd and ntpd regardless. You probably won’t notice time sync problems until it’s too late to gather data, so be proactive and turn them on from the outset if you aren't sure.

  - For chronyd, enable at least the measurements, statistics, and tracking logging options.

  - For ntpd, enable the clockstats, loopstats, peerstats, and sysstats filegen options.

- Be aware of the impact of reboots. If you have time-sensitive workloads, ensure that they are configured to depend on the NTP service before they start, and don't assume that just because the service is started that time sync has achieved any particular offset - check the offset by querying the NTP service first.

- Don’t mix leap smeared and non-smeared time sources. Otherwise, you'll be in for some pain when the next leap second comes. The good news is that leap seconds are going away in 2035. But the bad news is that we'll need to come up with some other way to reconcile atomic time with astronomical time (I personally like the idea of leap hours every 6000-7000 years). I plan to be retired before either of those things happen, so good luck sorting that out, everyone. 😃

- Familiarise yourself with RFC8633: NTP Best Current Practices, particularly the guidelines in section 3.

VMs

- For AWS EC2 and Azure VMs, use the local hypervisor and country/region public pools as sources (bearing in mind the leap second caveat on EC2 hypervisors).

  - If some of your EC2 hosts do not have direct Internet connectivity (very common in application and secure subnet tiers), provide one EC2 instance per AZ in the public subnets to connect to lower stratum hosts. Configure EC2 instances in the application and secure subnets to use the instances you created in the public subnets as sources in place of the public pools (and in addition to the local hypervisor).

- ARM is possibly slightly less mature than AMD/Intel in time sync quality, but still very usable. If you're expecting to deploy time-sensitive workloads on ARM instances, you should perform your own measurements on the target instance types to confirm that the metrics are within acceptable ranges.
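As a sketch of the per-AZ relay arrangement for EC2, the functions below render chrony configuration lines for both roles. The relay hostnames and the VPC range are hypothetical placeholders, `allow` is the chrony directive that lets a host serve time to the listed clients, and the hypervisor line mirrors the Ubuntu AMI's source file shown earlier. Note that this mixes the (leap smeared) hypervisor with (non-smeared) public pools, so the leap smearing caveat above applies.

```python
# Mirrors the hypervisor source line from the Ubuntu AMI shown earlier.
HYPERVISOR = "server 169.254.169.123 prefer iburst minpoll 4 maxpoll 4"


def relay_config(vpc_cidr: str) -> str:
    """chrony.conf lines for a relay instance in a public subnet (one per AZ)."""
    return "\n".join([
        HYPERVISOR,
        "pool 2.au.pool.ntp.org iburst maxsources 4",
        f"allow {vpc_cidr}",  # serve time to clients inside the VPC
    ])


def private_config(relays: list) -> str:
    """chrony.conf lines for an instance in an application or secure subnet."""
    lines = [HYPERVISOR]
    lines += [f"server {relay} iburst" for relay in relays]
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical relay names, one per availability zone.
    relays = ["ntp-a.internal.example", "ntp-b.internal.example", "ntp-c.internal.example"]
    print(relay_config("10.0.0.0/16"))
    print()
    print(private_config(relays))
```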

Containers

With containers, our overall goals are the same as for VMs, but because we're unable to control the system clock, we have to accept the time sync provided by the host. This makes it all the more important for time-sensitive workloads to measure the quality of the local clock and alert the system owners should your accuracy goals not be met. (You might want to check out NTPmon, my NTP monitoring utility, to help you with this, or if you’re using Fargate, monitor the clock metrics in the task metadata endpoint.)
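For Fargate, a minimal sketch of reading those clock metrics might look like the following. It assumes the ECS task metadata endpoint v4 (whose base URL is injected into the container as the `ECS_CONTAINER_METADATA_URI_V4` environment variable) and a `ClockDrift` object with `ClockErrorBound` (which I understand to be in milliseconds), `ReferenceTimestamp`, and `ClockSynchronizationStatus` fields; check the ECS documentation for your platform version before relying on the names or units. The 1 ms alert threshold is just an example.

```python
import json
import os
import urllib.request


def clock_drift(task_metadata: dict) -> dict:
    """Extract the ClockDrift block from a task metadata (v4) response."""
    drift = task_metadata.get("ClockDrift")
    if drift is None:
        raise KeyError("no ClockDrift in task metadata (not Fargate, or older platform?)")
    return drift


def is_healthy(drift: dict, max_error_ms: float = 1.0) -> bool:
    """True if the clock reports synchronised and its error bound is within the limit."""
    return (drift.get("ClockSynchronizationStatus") == "SYNCHRONIZED"
            and float(drift.get("ClockErrorBound", float("inf"))) <= max_error_ms)


def fetch_task_metadata(timeout: float = 2.0) -> dict:
    """Fetch the task metadata JSON from inside a running ECS task."""
    base = os.environ["ECS_CONTAINER_METADATA_URI_V4"]
    with urllib.request.urlopen(f"{base}/task", timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    drift = clock_drift(fetch_task_metadata())
    print(drift)
    if not is_healthy(drift):
        print("WARNING: clock accuracy goal not met")
```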

In terms of the services tested, Fargate is a clear winner and Azure App Service the least preferred in terms of clock accuracy, although if you don't require sub-millisecond precision, all of the container services measured here work fine.

That's all, folks!

I hope this series has helped you to understand when and why time synchronisation is important, and provided a starting point for configuration and investigation of the time sync issues you’re likely to encounter in AWS and Azure. If you have any questions, comments, or corrections, feel free to reach out via the contact form on this web site.

I’d like to thank Mantel Group, who allowed me to spend work time writing much of this blog series, along with all the colleagues who provided feedback. Special thanks to Sarah Pelham for her enthusiasm, dedication, and eye for detail.

Credits/Resources

- NTP docs

- chrony

- gnuplot - used for making the analogue clock graphs

- InfluxDB - used for collecting and graphing the NTP sources

- RFC8633: NTP Best Current Practices

- IETF NTP resources, including published & draft RFCs

- IETF NTP working group mailing list

- Interesting background on the history of timekeeping, and measurement of unsynchronised clocks on the Internet, by Geoff Huston

- ServerFault NTP tag

Related posts

- What’s the time, Mister Cloud? An introduction to and experimental comparison of time synchronisation in AWS and Azure, part 1

- What’s the time, Mister Cloud? An introduction to and experimental comparison of time synchronisation in AWS and Azure, part 2

- AWS microsecond-accurate time: a second look

- AWS microsecond-accurate time: a first look

- What's the time, Mister Cloud? The soundtrack