Computer Timekeeping

In part 1 of this series we thought about traditional clocks from first principles, and we made some basic visualisations of their accuracy. Here’s where we start to get technical and talk about actual computer clocks and the mechanisms for keeping them in sync. We’re going to cover three different use cases: bare metal, virtual machines, and containers.

Time zones

Before we go on, a quick note about time zones: there are none.

You might think I’m joking, but it’s true - in time synchronisation, there are no time zones. Linux kernel time is always UTC, and time zones are a user space problem. They’re implemented as an offset from the kernel time by a positive or negative number of seconds.

You can see this for yourself by running these commands from a Linux system (my work laptop, in this example):

$ unset TZ

$ cat /etc/timezone

Australia/Brisbane

$ date

Thu 15 Feb 10:04:29 AEST 2024

$ date +%s

1707955472

$ export TZ=Australia/Melbourne

$ date

Thu 15 Feb 11:04:46 AEDT 2024

$ date +%s

1707955488

$ export TZ=Etc/UTC

$ date +%s

1707955497

$ date

Thu 15 Feb 00:05:02 UTC 2024

$ date +%s

1707955504

$ export TZ=US/Pacific

$ date

Wed 14 Feb 16:05:12 PST 2024

$ date +%s

1707955514

Our time zone is just a setting (the TZ environment variable, or the system default recorded in /etc/timezone if TZ is not set) which tells date and other commands that display time which offset to use. The real time is the number returned by date +%s, which is the kernel's count of seconds since midnight UTC on 1 January 1970. As you can see, that counter changes by no more than a few seconds on each time zone change (the time it takes me to re-enter those commands), even though the output of the bare date command changes by hours at a time.
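The same point can be made in a few lines of Python. This is only a minimal sketch: the function name render is made up for illustration, and the UTC offsets are hard-coded assumptions rather than looked up from the tz database (which is what date actually consults). It takes one fixed value of the seconds counter and displays it at several offsets.

```python
from datetime import datetime, timedelta, timezone

# First `date +%s` value from the transcript above.
EPOCH_SECONDS = 1707955472


def render(epoch_seconds: int, utc_offset_hours: int) -> str:
    """Format one fixed instant as wall-clock time at the given UTC offset."""
    tz = timezone(timedelta(hours=utc_offset_hours))
    return datetime.fromtimestamp(epoch_seconds, tz=tz).strftime("%Y-%m-%d %H:%M:%S %z")


if __name__ == "__main__":
    # Offsets are hard-coded for illustration; real zone rules (including
    # daylight saving) come from the tz database, not from a fixed number.
    for label, hours in [("Brisbane", 10), ("Melbourne", 11), ("UTC", 0), ("US/Pacific", -8)]:
        print(f"{label:<12}{render(EPOCH_SECONDS, hours)}")
```

The counter never changes between the four lines of output; only the presentation does.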

Types of clocks

There are three different types of clocks which are relevant to our discussion of timekeeping. We'll think about them from the perspective of bare metal systems first.

Hardware clocks

Hardware clocks are part of the electronic circuits in our computers which keep the time ticking along.

The first thing to know about hardware clocks is that they’re not clocks. They aren’t really connected to human time at all.

“In electronics and especially synchronous digital circuits, a clock signal (historically also known as logic beat) oscillates between a high and a low state and is used like a metronome to coordinate actions of digital circuits. A clock signal is produced by a clock generator. Although more complex arrangements are used, the most common clock signal is in the form of a square wave with a 50% duty cycle, usually with a fixed, constant frequency.” -- https://en.wikipedia.org/wiki/Clock_signal

Or to put it another way, it's a simple signal that toggles between on and off as quickly (traditionally 100 times per second, i.e. 100 Hz) and as consistently as it can. Modern systems usually have several different clocks, independent of the CPU clock (which is the figure quoted when you see CPU speeds advertised, e.g. 4.5 GHz).

The next important characteristic of hardware clocks to consider is that because they're components which exist in the physical world, they can have impurities, faults, and natural variances which make them imperfect at producing a constant-frequency signal. In the time synchronisation field, this imperfection is called frequency error, and is usually reported in parts-per-million (PPM).

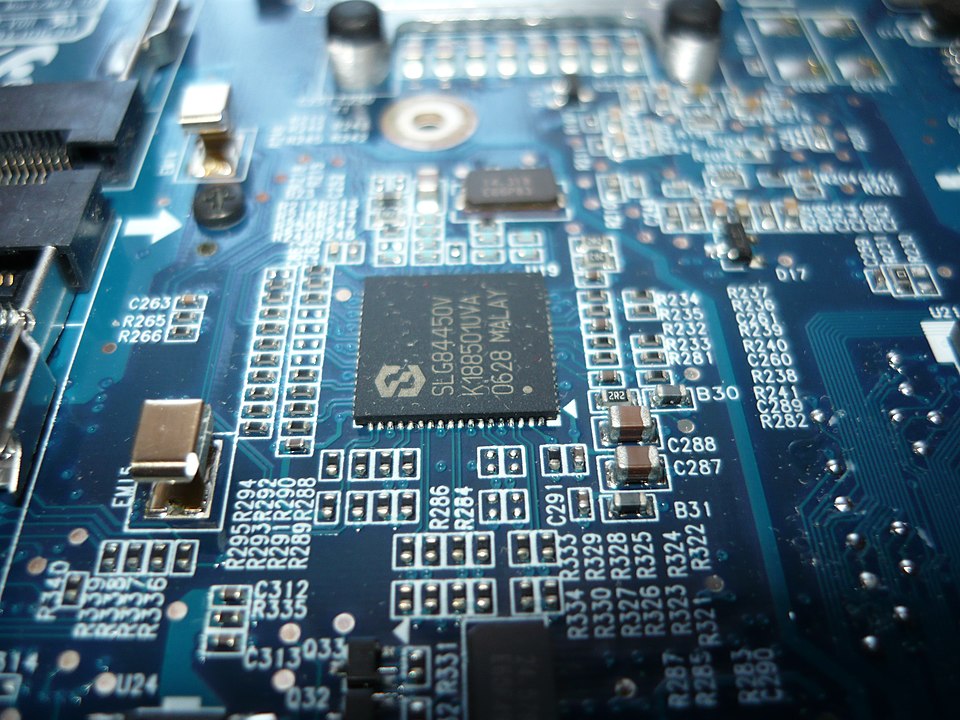

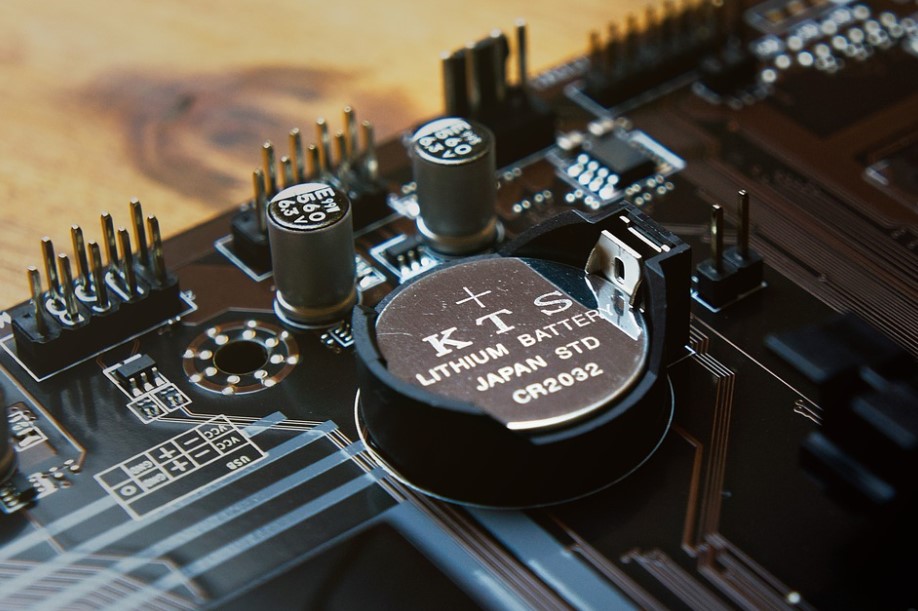
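To get a feel for what a frequency error in PPM means in practice, here is a small worked calculation. It is a sketch under a simplifying assumption: the error is constant and nobody corrects the clock. The PPM values are illustrative, not measurements of any particular hardware.

```python
SECONDS_PER_DAY = 86_400


def drift_seconds(frequency_error_ppm: float, elapsed_seconds: float) -> float:
    """Time error accumulated by an uncorrected clock over elapsed_seconds."""
    return frequency_error_ppm * 1e-6 * elapsed_seconds


if __name__ == "__main__":
    for ppm in (1, 10, 100):  # illustrative values only
        per_day_ms = drift_seconds(ppm, SECONDS_PER_DAY) * 1000
        print(f"{ppm:>4} PPM -> {per_day_ms:.1f} ms/day")
```

One part per million works out to 86.4 milliseconds per day, which is why even small frequency errors matter once a clock has been left alone for a while.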

Real Time Clock (RTC)

The RTC (a.k.a. the BIOS or CMOS clock) is another hardware component which tracks the human date and time while your computer is turned off. You usually won't need to deal with it unless you've got a hardware failure, but if you have ever had to go looking for one of these on a motherboard, or turned on an old laptop after it has been off for a while, you've seen the effects of the RTC failing. The RTC is agnostic of time zones - it’s usually set to UTC on Linux systems and local time on Windows systems, and can be set manually from the BIOS setup program or from a running operating system.

Real Time Clocks are not used while the system is running and are generally less precise than hardware clocks. This can sometimes become a significant factor when a system reboots, because at system boot the system clock is initialised from the RTC. (More on this in part 3.)

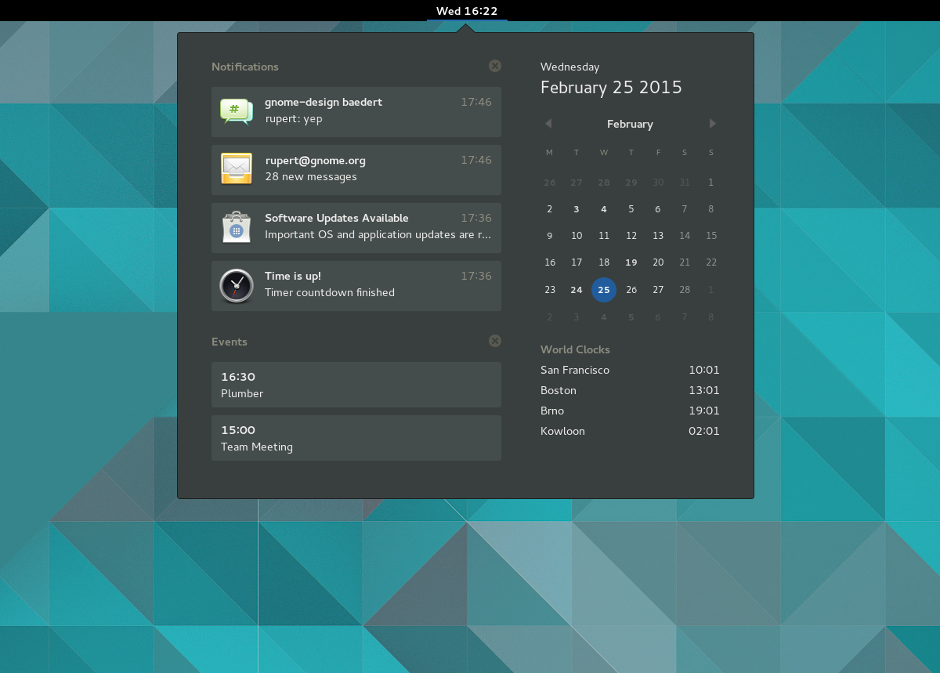

System clock

The system clock is the source of that number we've already encountered above when talking about time zones, the kernel seconds counter. It is a software counter maintained by the kernel which uses measurements from the hardware clocks to provide and track the human- and application-usable time. Whenever you look at the clock on your computer or phone, you're looking at a representation of the system clock.
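If you would rather read the system clock from a program than from date, the following minimal sketch does so with Python's standard time module. The helper names are made up for illustration; the only library call is time.time_ns(), which returns the system clock as nanoseconds since the Unix epoch.

```python
import time

NANOS_PER_SECOND = 1_000_000_000


def split_nanoseconds(total_ns: int) -> tuple[int, int]:
    """Split a nanosecond count into (whole seconds, leftover nanoseconds)."""
    return divmod(total_ns, NANOS_PER_SECOND)


def read_system_clock() -> tuple[int, int]:
    """Read the system clock as (seconds, nanoseconds) since the Unix epoch."""
    return split_nanoseconds(time.time_ns())


if __name__ == "__main__":
    seconds, nanos = read_system_clock()
    print(f"{seconds}.{nanos:09d}")  # same shape as `date +%s.%N`
```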

Clocks in action

Let's take a look at how these clocks appear to us from the perspective of the Linux operating system.

Bare metal

You can see some of the current settings of your system in relation to these clocks with the following Linux commands:

# *** Check the available and in-use hardware clocks

root@work:~# grep . /sys/devices/system/clocksource/clocksource*/[ac]*_clocksource

/sys/devices/system/clocksource/clocksource0/available_clocksource:hpet acpi_pm

/sys/devices/system/clocksource/clocksource0/current_clocksource:hpet

# *** The real time clock is accessed by a rather confusingly named command. 😃

root@work:~# hwclock --show

2024-02-15 11:45:04.905087+10:00

# *** system clock

root@work:~# date +"%F %T.%N%:z"

2024-02-15 11:45:12.169277095+10:00

This output is from my laptop again. Time synchronisation will try to keep all three of these in sync with each other (although the hardware clock can't actually be altered), and with other computers on the network.
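The clocksource files read by the grep command above are ordinary text files, so they are easy to read from a program too. Here is a minimal Python sketch; the function name and the shape of the returned dictionary are my own choices for illustration, and missing files (for example on a non-Linux system) are treated as "unknown" rather than as an error.

```python
from pathlib import Path

# Default location used by the grep command above.
CLOCKSOURCE_DIR = Path("/sys/devices/system/clocksource/clocksource0")


def read_clocksources(base: Path = CLOCKSOURCE_DIR) -> dict:
    """Return the in-use clocksource and the list of available ones.

    Missing files (non-Linux systems, restricted environments) yield
    None / an empty list rather than raising.
    """
    def read(name: str) -> str:
        try:
            return (base / name).read_text().strip()
        except OSError:
            return ""

    current = read("current_clocksource")
    available = read("available_clocksource").split()
    return {"current": current or None, "available": available}


if __name__ == "__main__":
    print(read_clocksources())
```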

Virtual machines

Virtual machines such as AWS EC2 instances are not allowed to manipulate the RTC, so they are given emulated or read-only access to it and the hardware clock (except on the Xen hypervisor, where the RTC shows nothing). Because VMs run their own separate kernel, there is a separate system clock maintained by each VM individually.

Here's how that looks in practice:

# *** hardware clocks

root@localhost:~# grep . /sys/devices/system/clocksource/clocksource*/[ac]*_clocksource

/sys/devices/system/clocksource/clocksource0/available_clocksource:tsc kvm-clock hpet acpi_pm

/sys/devices/system/clocksource/clocksource0/current_clocksource:tsc

# *** real time clock

root@localhost:~# hwclock --show

2022-12-09 01:26:42.698199+00:00

# *** system clock

root@localhost:~# date +"%F %T.%N%:z"

2022-12-09 01:26:53.083555712+00:00

This output is from a t3.nano instance on AWS. It's not really that much different from my laptop except for the fact that different hardware clocks are available, and there's kvm-clock, which is available because we're running under the KVM hypervisor.

In the early days of VM adoption, there were a lot of problems with hardware clock emulation accuracy, leading a lot of sysadmins to conclude that time sync in VMs was a non-starter. Those problems are a thing of the past, and if you encounter someone telling you differently, feel free to point them in my direction. 😃

Containers

With containers, things are a little bit different. Containers share their host's kernel, so there's no separate system clock. Changing the system clock and reading the RTC are both privileged, so containers cannot access them under normal circumstances. (I have heard that work is underway to namespace the system clock, which would allow containers to have a different system time from their hosts, but I couldn’t lay my hands on any documentation confirming this.)

Here's what this looks like in practice:

# *** hardware clocks

root@container:~# grep . /sys/devices/system/clocksource/clocksource*/[ac]*_clocksource

/sys/devices/system/clocksource/clocksource0/available_clocksource:tsc acpi_pm

/sys/devices/system/clocksource/clocksource0/current_clocksource:tsc

# *** real time clock

root@container:~# hwclock --show

hwclock: Cannot access the Hardware Clock via any known method.

hwclock: Use the --verbose option to see the details of our search for an access method.

# *** system clock

root@container:~# date

Fri Oct 7 03:01:35 UTC 2022

root@container:~# date 09070301

date: cannot set date: Operation not permitted

Wed Sep 7 03:01:00 UTC 2022

root@container:~# date

Fri Oct 7 03:02:00 UTC 2022

This output is from a container on my laptop. When we ask for the RTC, we're outright refused. When we try to change the system clock we get an error and the date command displays the time we requested, but next time we ask the system it's still using the same time it did before.

Time sync standards

There have been various standardised time synchronisation protocols used in computer networks over the past 40 years or so, from the very simple to the highly sophisticated:

- Daytime/Timep (RFC 867/868, 1983) - ask another computer for the time; set the local time to that. These protocols are obsolete, because they don’t take into account the latency between the computers, nor do they attempt to discipline the frequency of the local clock.

- NTP (Network Time Protocol: first specified in RFC 958, 1985; v4, RFC 5905, 2010) - periodically poll a set of sources and constantly track their quality and the quality of the local clock, trying to converge on the one true time (UTC).

- SNTP (Simple Network Time Protocol, also RFC 5905) - a cut-down version of NTP which uses periodic rather than constant adjustment, similar to Daytime/Timep. It is commonly used by low-power devices which can't afford to spend compute cycles on constant adjustment.

- PTP (Precision Time Protocol: IEEE 1588, 2002/2008/2019) - periodically broadcast the one true time over a known-good network where you control every hop. PTP is a popular protocol within closed networks with very high precision timing requirements.

NTP is the primary protocol used over the public Internet and will be the focus of our discussions from here on. Version 5 of the protocol is presently being drafted in the IETF working group.

Network Time Protocol (NTP)

NTP runs as a daemon process on Linux and adjusts the system’s perception of time through small, continuous corrections of the kernel clock. It does this by polling reference sources such as GPS receivers or atomic clocks, as well as other computers running NTP on the Internet. It then uses various mathematical means to calculate the one true time (UTC), compensating for network delay and congestion, poor quality clocks, and malicious actors. Once it has worked out the difference between the system clock and UTC, it calls adjtimex(2) to make small adjustments to the system clock in the direction of the correct time.
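To make "compensating for network delay" a little more concrete, here is the basic on-wire calculation from RFC 5905, sketched in Python. The function name and the sample timestamps are made up for illustration. This is only the first step: a real NTP daemon filters many such samples from many sources before it touches the clock.

```python
def offset_and_delay(t1: float, t2: float, t3: float, t4: float) -> tuple[float, float]:
    """Standard NTP on-wire calculation (RFC 5905).

    t1: client transmit time (client clock)
    t2: server receive time  (server clock)
    t3: server transmit time (server clock)
    t4: client receive time  (client clock)
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2  # server clock minus client clock
    delay = (t4 - t1) - (t3 - t2)         # round trip minus server processing time
    return offset, delay


if __name__ == "__main__":
    # Hypothetical exchange: the client is 0.5 s behind the server, each
    # network leg takes 10 ms, and the server spends 2 ms on the request.
    offset, delay = offset_and_delay(100.000, 100.510, 100.512, 100.022)
    print(f"offset={offset:+.3f}s delay={delay:.3f}s")
```

The offset is exact only when the outbound and return legs take the same time. Any asymmetry between them shows up as an error of up to half the round trip delay, which is one reason a low delay to your sources is worth having.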

NTP is organised into strata, where the clocks closer to the original time sources are lower strata and those further away are higher strata. Each NTP host increments the stratum counter from its sync source. Sources like GPS are stratum 0, the NTP hosts with GPS receivers are stratum 1, the hosts synced with those NTP hosts are stratum 2, and so on.

NTP uses an all-active architecture (i.e. it doesn't fix on one particular source and fail over only if that source stops responding), and for maximum accuracy it should not be used in conjunction with load-balancing or anycast routing. It configures the kernel to write the system clock to the RTC every 11 minutes.

This point about the one true time is something that we need to keep coming back to, and something that should cause us to think differently about NTP compared with other protocols. It's not like DNS where it's trying to get an answer about the IP address associated with a name, and it doesn't matter where it gets it from, as long as it gets the correct answer. NTP is asking multiple computers for the time, knowing in advance that all of them will be differently, subtly, and inconsistently wrong. NTP is therefore not trying to make your computer's clock match the other ones in your network - it is trying to set it to the right time, based on the best information it has to hand, and (unless you configure it badly) never trusting any one of them completely. Many people don’t realise this and try to make NTP work in ways it wasn’t intended (including me when I first started working with NTP).

Which leads to the next point: NTP uses consensus algorithms in a number of instances (including for orphan mode and leap second indicators), but the main intersection algorithm is not a traditional consensus protocol - it uses ranges rather than discrete values. This means that we shouldn't let experience with failure modes from (for example) database clusters or Raft/Paxos state machines determine our thinking about NTP. In particular, there's no necessity for there to be an odd number of sources, and there's no reason to believe that two equally good clocks is worse than one (two common myths about NTP peer selection).
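To illustrate what "ranges rather than discrete values" means, here is a simplified sketch of Marzullo's algorithm, which NTP's intersection algorithm refines. It is emphatically not ntpd's or chrony's actual selection code, and the numbers in the example are hypothetical. Each source contributes a range (its offset minus and plus its error bound), and we look for the range that the largest number of sources agree on.

```python
def intersect(intervals):
    """Return (count, low, high): the range agreed on by the most sources.

    Each interval is (low, high): a source's offset minus/plus its error bound.
    """
    edges = []
    for low, high in intervals:
        edges.append((low, -1))   # -1 marks the start of an interval
        edges.append((high, +1))  # +1 marks the end of an interval
    edges.sort()  # at equal values, starts sort before ends

    best = count = 0
    best_low = best_high = None
    for i, (value, kind) in enumerate(edges):
        count -= kind  # entering an interval raises the count, leaving lowers it
        if count > best:
            best = count
            best_low, best_high = value, edges[i + 1][0]
    return best, best_low, best_high


if __name__ == "__main__":
    # Two hypothetical sources (offsets in ms): -1..3 and 2..5.
    print(intersect([(-1.0, 3.0), (2.0, 5.0)]))
```

With two overlapping sources the result is the range 2.0 to 3.0, which is narrower than either source's range on its own. Nothing here needs an odd number of sources or a majority vote.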

NTP implementations

There are a few different NTP implementations you'll likely encounter in the wild:

- ntpd (the original implementation created by the late David L. Mills of the University of Delaware)

- chronyd (the default in current versions of Amazon and Ubuntu Linux)

- Various SNTP clients (systemd-timesyncd is one example)

- Windows Server 2016+

NTP in action - ntpd

If we have a system running ntpd as a daemon process, we can find out about our time sources using ntpq, the NTP query program:

$ ntpq -np

remote refid st t when poll reach delay offset jitter

==============================================================================

ntp.lan.gear.sy .POOL. 16 p - 64 0 0.000 +0.000 0.002

ntp.on.net .POOL. 16 p - 64 0 0.000 +0.000 0.002

o127.127.20.0 .PPS. 0 l 1 8 377 0.000 +0.068 0.069

-2403:300:a08:30 .GPSs. 1 u 1 64 377 17.887 +0.205 0.890

-2403:300:a08:40 .GPSs. 1 u 3 64 377 18.742 -0.037 0.484

+2001:44b8:2100: 42.3.115.79 2 u 60 64 377 0.607 +0.269 0.168

+2001:44b8:2100: 42.3.115.79 2 u 47 64 377 1.372 +0.267 0.154

+2001:44b8:2100: .PHC0. 1 u 43 64 377 0.415 +0.371 0.059

+2001:44b8:2100: .PPS. 1 u 20 64 377 0.752 +0.285 0.296

+2001:44b8:2100: 42.3.115.79 2 u 41 64 377 0.624 +0.305 0.132

This output is taken from my BeagleBone time server; it has a GPS receiver which provides a pulse-per-second (PPS) signal. The command used asks ntpq to show each peer (p), or time source, and use its numeric (n) address rather than DNS name. Each line shows a different source that ntpd is using (remote), which source that source is using (refid), its stratum (st), and various other characteristics of its communication with that peer. The most interesting ones normally are delay, which is how many milliseconds it takes to get a round trip response from that source, and offset, which is the calculated difference between the source's clock and the local clock (also in milliseconds). The closer that number is to zero, the better.

Refer to the NTP documentation or my blog for more info about interpreting this output. You might also want to try the command ntpq -nc readvar 0, which gives the overall system offset (taking into account all sources) and the best estimate of maximum error (called root dispersion).
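If you want to work with these columns in a script, here is a minimal Python sketch that splits one peer line into named fields. The function name and dictionary keys are my own, and it assumes the line still has its original first column, which holds the one-character tally code (a space when the peer has none). One detail worth knowing: the reach column is printed in octal and records the outcome of the last eight polls, so 377 means all eight were answered.

```python
def parse_peer(line: str) -> dict:
    """Parse one peer line from `ntpq -p` output into named fields.

    Assumes the line keeps its original first column, which holds the
    one-character tally code (a space when the peer has none).
    """
    tally, rest = line[0], line[1:].split()
    remote, refid, st, kind, when, poll, reach, delay, offset, jitter = rest
    return {
        "tally": tally,
        "remote": remote,
        "refid": refid,
        "stratum": int(st),
        "type": kind,
        "when": when,  # left as text: may be "-" or carry a unit suffix
        "poll": poll,  # left as text for the same reason
        # reach is printed in octal: an 8-bit history of recent polls
        "polls_answered": bin(int(reach, 8)).count("1"),
        "delay_ms": float(delay),
        "offset_ms": float(offset),
        "jitter_ms": float(jitter),
    }


if __name__ == "__main__":
    peer = parse_peer("+2001:44b8:2100: .PHC0. 1 u 43 64 377 0.415 +0.371 0.059")
    print(peer["tally"], peer["stratum"], peer["polls_answered"], peer["offset_ms"])
```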

NTP in action - chronyd

As you might expect, systems running chrony also have a command to view NTP sources. It is quite similar to ntpq, but with a few subtle differences. The main ones are that the peer's full address is shown instead of trying to fit both that and the source's reference clock on the same line (important for those of us who use IPv6), and the offset and delay are displayed a little differently:

$ chronyc -n sources

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

#* PPS 0 4 377 10 +16ns[ +19ns] +/- 101ns

#- GPS 0 4 377 10 +9549us[+9549us] +/- 112ms

^- 2001:44b8:2100:3f00::7b:4 1 8 377 178 -179us[ -179us] +/- 490us

^? 2001:44b8:2100:3f11::7b:2 3 8 377 4d +76ms[ +477us] +/- 1759us

^- 2001:44b8:2100:3f00::7b:102 2 8 377 101 -316us[ -316us] +/- 17ms

^? 2001:44b8:2100:3f11::7b:1 0 8 377 - +0ns[ +0ns] +/- 0ns

^- 2001:44b8:2100:3f00::7b:5 2 8 377 81m -17us[ -10us] +/- 22ms

^- 2001:44b8:2100:3f00::7b:7 3 8 377 58m -346us[ -348us] +/- 1632us

^? 2001:44b8:2100:3f11::7b:6 0 8 377 - +0ns[ +0ns] +/- 0ns

^- 2001:44b8:2100:3f11::7b:3 1 8 377 5 +178us[ +178us] +/- 1283us

^- 2620:2d:4000:1::41 2 12 377 39m -3917us[-3929us] +/- 158ms

^- 2403:300:a08:3000::1f2 1 12 377 200 +88us[ +88us] +/- 9746us

This is from my Raspberry Pi time server, which also uses a PPS-capable GPS source. The last measured offset of each source is the number in square brackets, and the number after the +/- at the end is the estimated margin of error in that measurement. You can find more on this in chronyc's documentation. Chrony's equivalent to ntpq -nc readvar 0 is chronyc -n tracking.
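Because chronyc picks a unit per value (ns, us, ms), comparing sources by eye can be misleading. Here is a minimal Python sketch that normalises such values to seconds. The function name is made up, and the set of unit suffixes it accepts is an assumption based on the output above rather than an exhaustive list from chrony's documentation.

```python
import re

# Unit suffixes seen in the output above, plus plain seconds (an assumption).
_UNITS = {"ns": 1e-9, "us": 1e-6, "ms": 1e-3, "s": 1.0}
_VALUE = re.compile(r"^([+-]?\d+(?:\.\d+)?)(ns|us|ms|s)$")


def to_seconds(text: str) -> float:
    """Convert a chronyc-style value such as '+16ns' or '-179us' to seconds."""
    match = _VALUE.match(text.strip())
    if match is None:
        raise ValueError(f"unrecognised value: {text!r}")
    number, unit = match.groups()
    return float(number) * _UNITS[unit]


if __name__ == "__main__":
    for sample in ("+16ns", "-179us", "+76ms"):
        print(f"{sample:>8} = {to_seconds(sample):+.9f} s")
```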

Wait, wasn't this supposed to be something about Mister Cloud?

Thanks for hanging in there! We've reached the end of our whirlwind tour of computer timekeeping. In part 3 we'll apply our newfound awareness of time and knowledge of NTP to a number of different AWS and Azure compute services.
