
(Note: Readers who aren't familiar with time sync in public clouds might want to check out my recent series. My previous post about KVM timekeeping is also useful background.)

KVM PHC on AWS

As I mentioned in my first post about the topic, AWS' microsecond-accurate time was first released with quite limited availability: only on r7g instances, and only in the Tokyo (ap-northeast-1) and (more recently) Virginia (us-east-1) regions.

That post showed that there's a big benefit to using the Nitro PTP hardware clock (PHC). As I started to write my previous post about using the PHC on generic KVM guests, I realised the same approach could be applied to many AWS instance types, since they also run KVM. Assuming it worked, it wouldn't need any particular support from AWS, and could be used in any AWS region.

Test process

To test this, I created a new EC2 instance in the ap-southeast-2 (Sydney) region, installed chrony, and enabled the KVM PHC device. (You can find exact details about how to do this for Debian/Ubuntu in my previous post; the process is exactly the same for EC2 instances.)

My instance was initially provisioned as a t3a.micro (2 vCPUs, 1 GB RAM), but it performed poorly; the problem went away when I resized the instance to a c6a.large (2 vCPUs, 4 GB RAM). I put this down to a bad host - these were quite common in the early days of AWS, but I hadn't experienced the issue in years.

Because the c6a.large is a little more pricey than the instances I usually test on, I ran it for a shorter time than my usual tests: 10 hours with the PHC device enabled, and another 10 hours using only the network-based hypervisor NTP endpoint. This instance type runs on an AMD EPYC 7R13 CPU with a maximum clock rate of 3.6 GHz.

Measurements

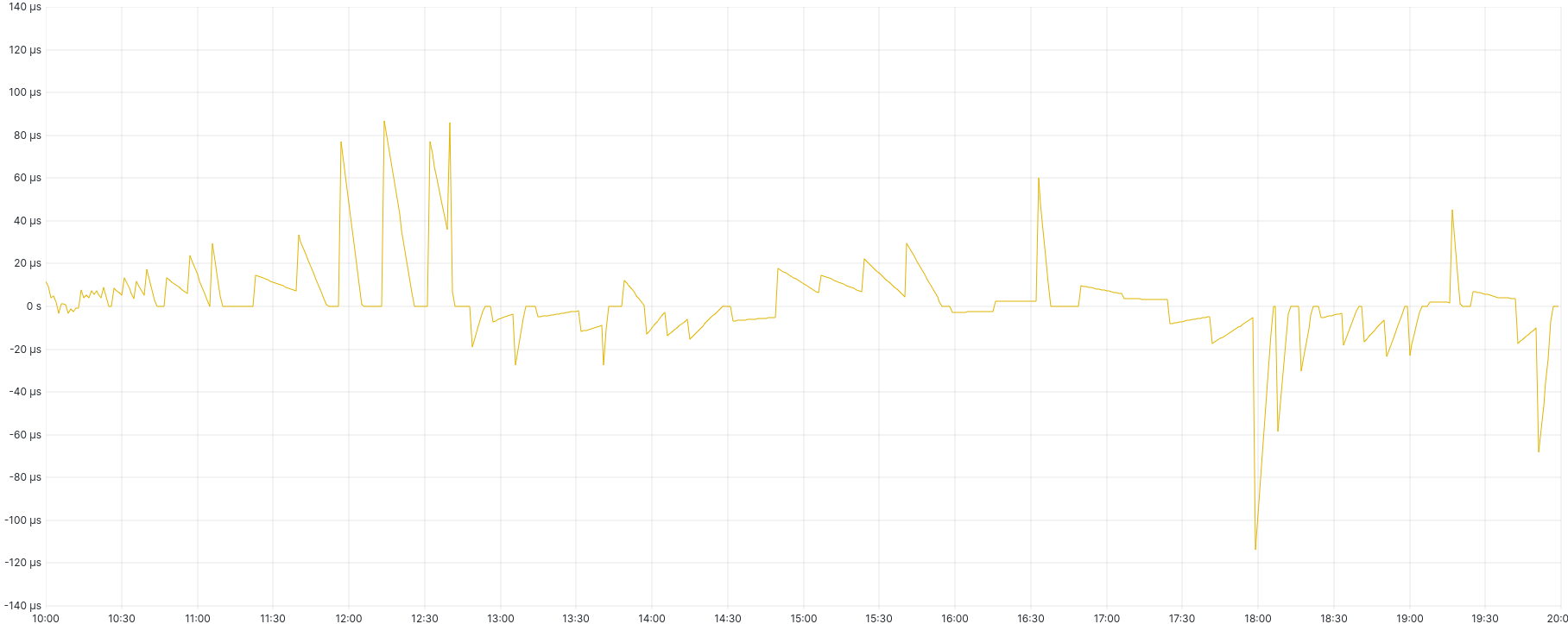

Over the period where the network-based hypervisor NTP endpoint was used, system offset had a range of ± 120 µs:

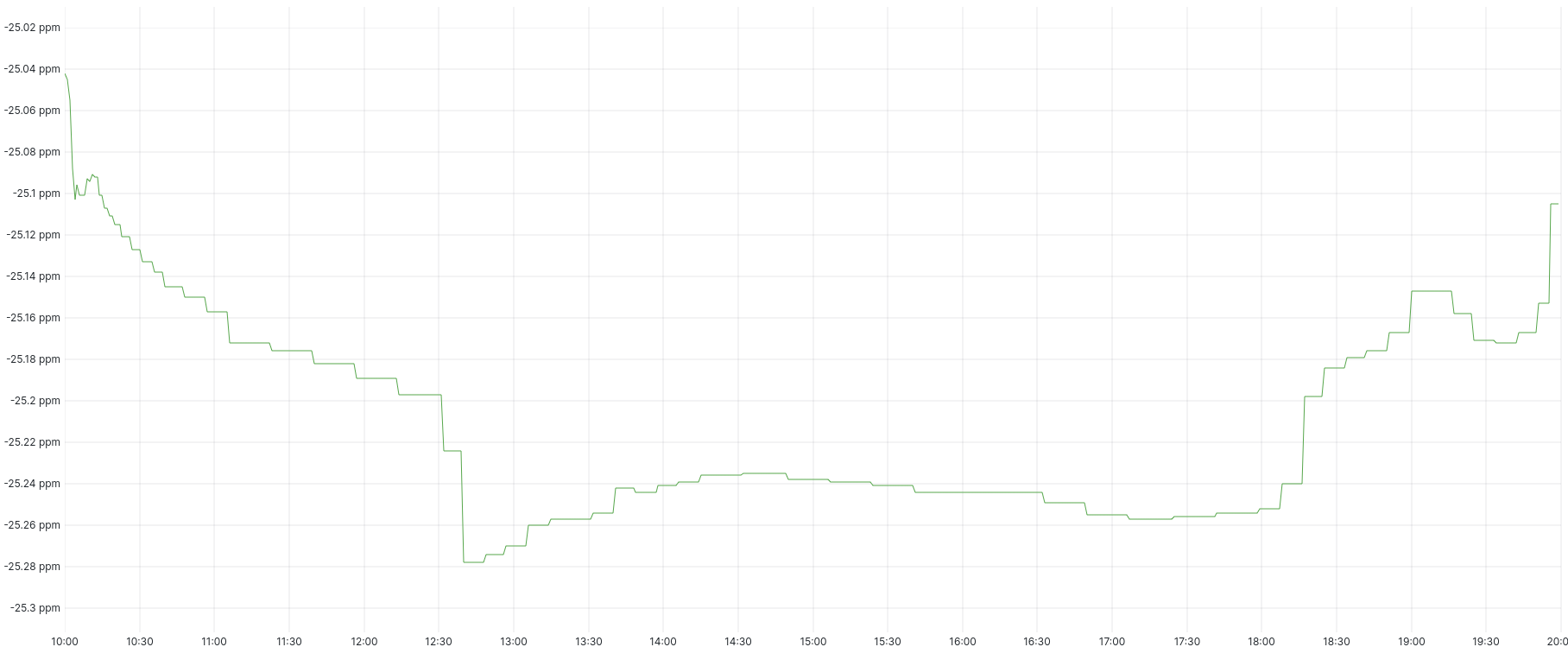

Frequency error was reasonably stable between -25.04 and -25.28 ppm:

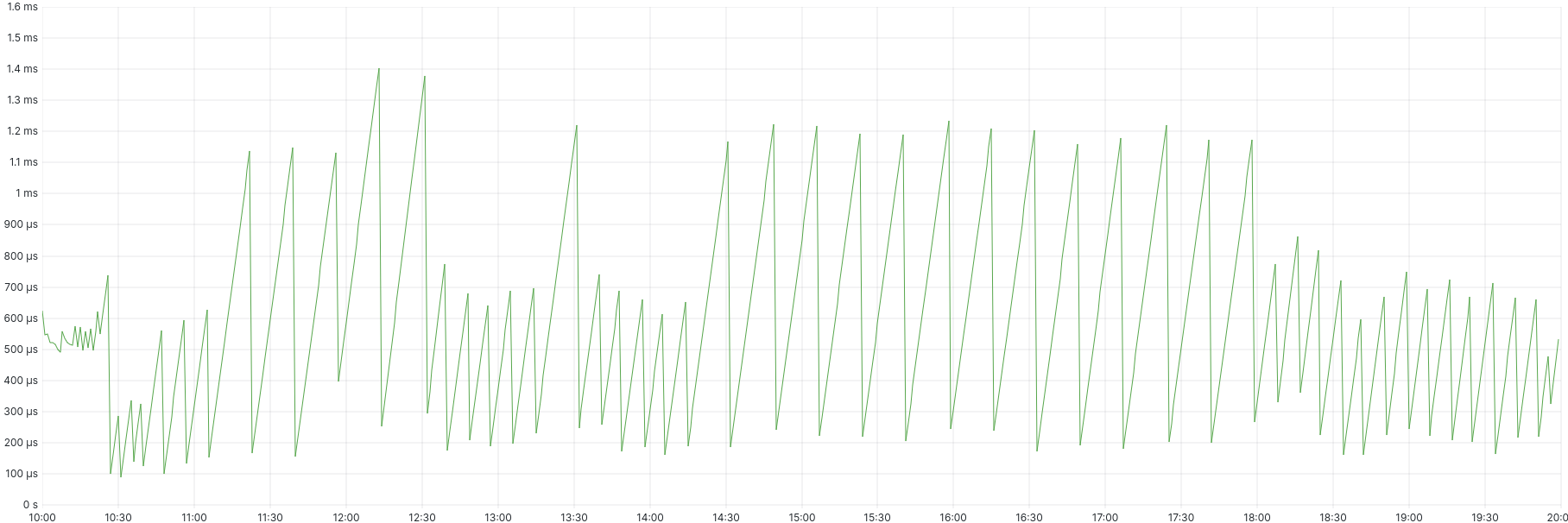
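To put those ppm figures in perspective, here is a small arithmetic sketch (not part of the original measurements) that converts a frequency error into the drift it would cause per day if nothing were correcting it. The function name is hypothetical, and it deliberately reports only the magnitude rather than assuming a sign convention.

```python
SECONDS_PER_DAY = 86_400


def drift_ms_per_day(freq_error_ppm):
    """Magnitude of the drift (ms/day) an uncorrected frequency error would cause."""
    # 1 ppm means one microsecond of error per second of elapsed time.
    return abs(freq_error_ppm) * 1e-6 * SECONDS_PER_DAY * 1_000


for ppm in (-25.04, -25.28):
    print(f"{ppm} ppm is about {drift_ms_per_day(ppm):.0f} ms/day if left uncorrected")
```

In other words, a clock that is off by roughly 25 ppm would drift by a little over 2 seconds per day without chrony steering it, which is why the stability of this value matters more than its absolute size.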

And root dispersion peaked at 1.4 ms:

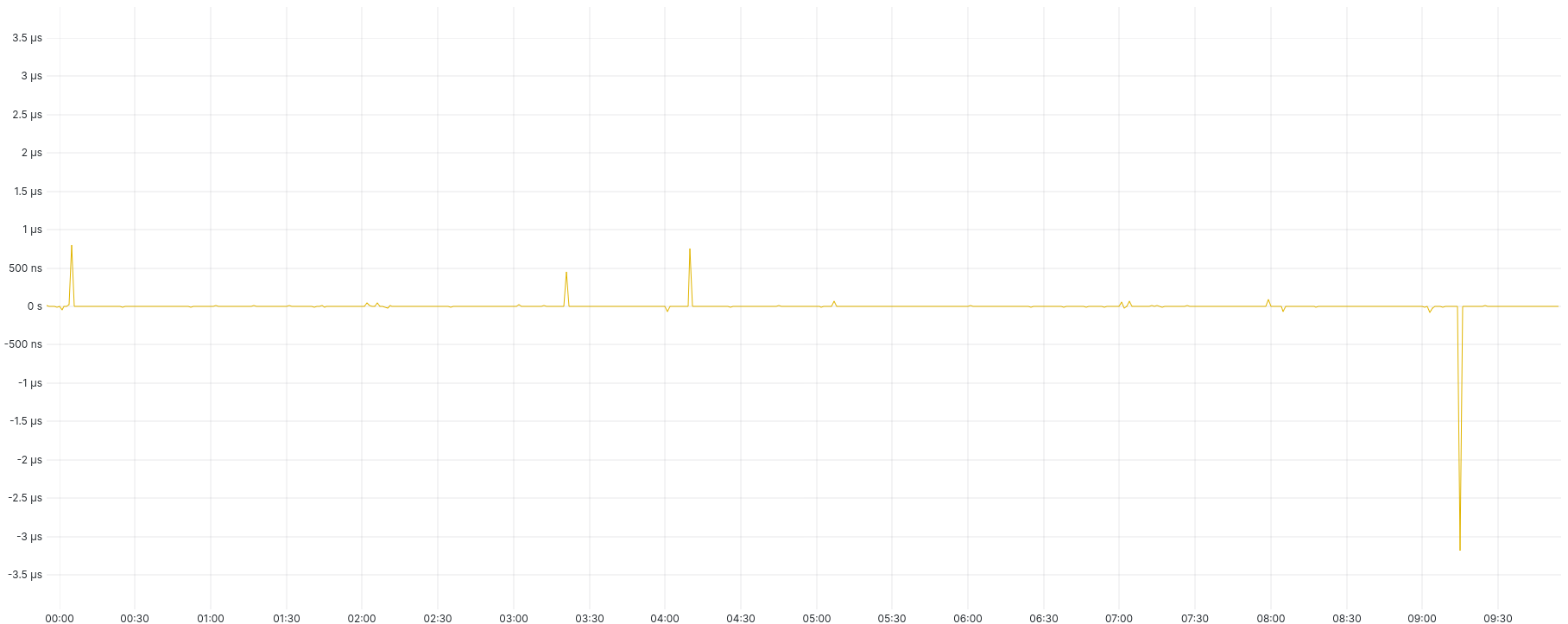

With the PHC device in use, system offset ranged between +1 and -3 µs:

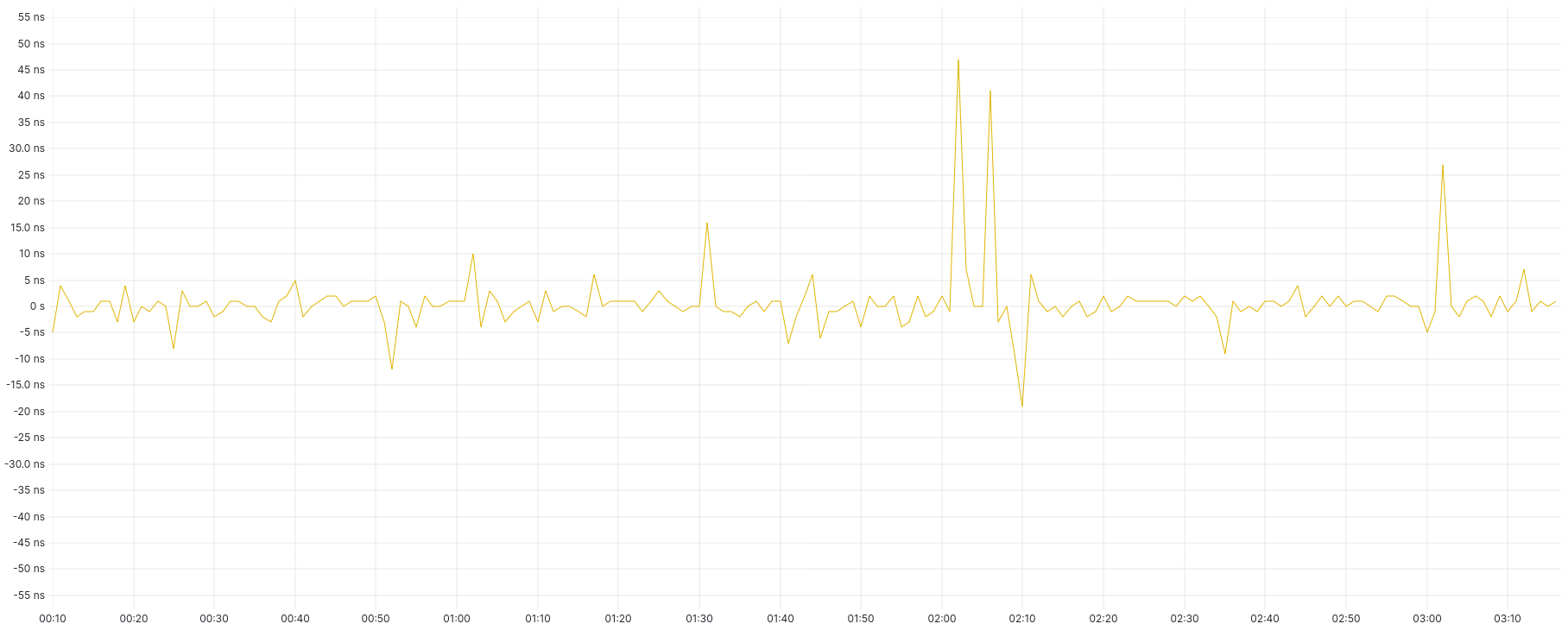

Within this time there were periods of up to 3 hours where it did not exceed ± 50 nanoseconds:

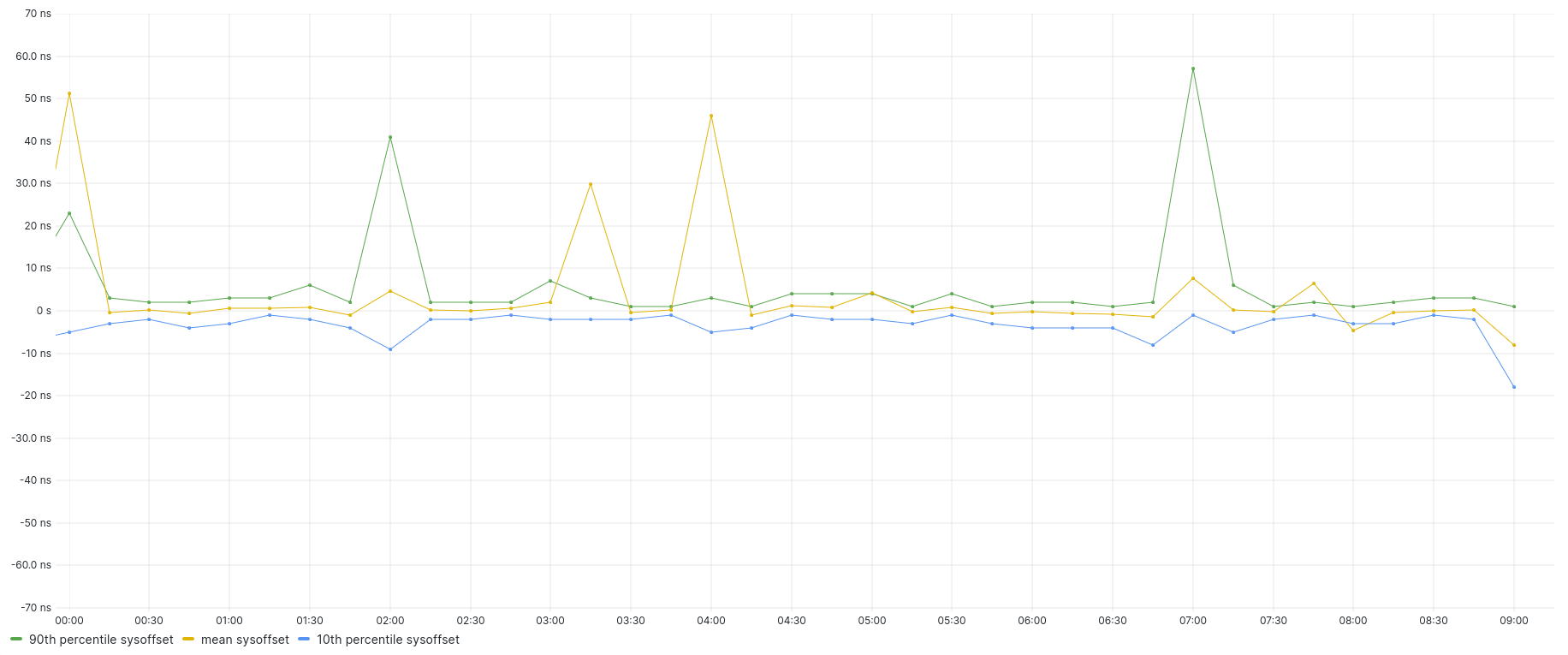

And in fact if we look at 15-minute periods to mitigate the effects of some of those spikes, for a 9-hour period the 10th and 90th percentiles were never outside ± 60 ns (I tried to make these the 5th and 95th percentiles, but I couldn't make it work reliably - the 5th percentile kept disappearing; I obviously need to work on my Grafana skills 😃):
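For anyone who would rather compute windowed percentiles like these outside Grafana, the following is a minimal sketch using only the Python standard library. It is not how the graphs in this post were produced; the function name and the input shape (pairs of Unix timestamp and offset in seconds) are assumptions made for illustration.

```python
from collections import defaultdict
from statistics import quantiles

WINDOW_SECONDS = 15 * 60  # 15-minute buckets, as used in the text


def windowed_percentiles(samples, window=WINDOW_SECONDS):
    """Return {window_start: (p10, p90)} for (unix_time, offset) samples.

    Windows with fewer than two samples are skipped, because
    statistics.quantiles needs at least two data points.
    """
    buckets = defaultdict(list)
    for timestamp, offset in samples:
        # Align each sample to the start of its window.
        buckets[int(timestamp // window) * window].append(offset)

    result = {}
    for start, offsets in sorted(buckets.items()):
        if len(offsets) < 2:
            continue
        # n=10 yields nine cut points: the first is the 10th percentile,
        # the last is the 90th.
        cuts = quantiles(offsets, n=10, method="inclusive")
        result[start] = (cuts[0], cuts[-1])
    return result
```

Switching to 5th/95th percentiles would mean using `n=20` and taking the first and last cut points in the same way.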

Frequency error was actually more uneven with the PHC enabled, varying between -21.5 and -30 ppm:

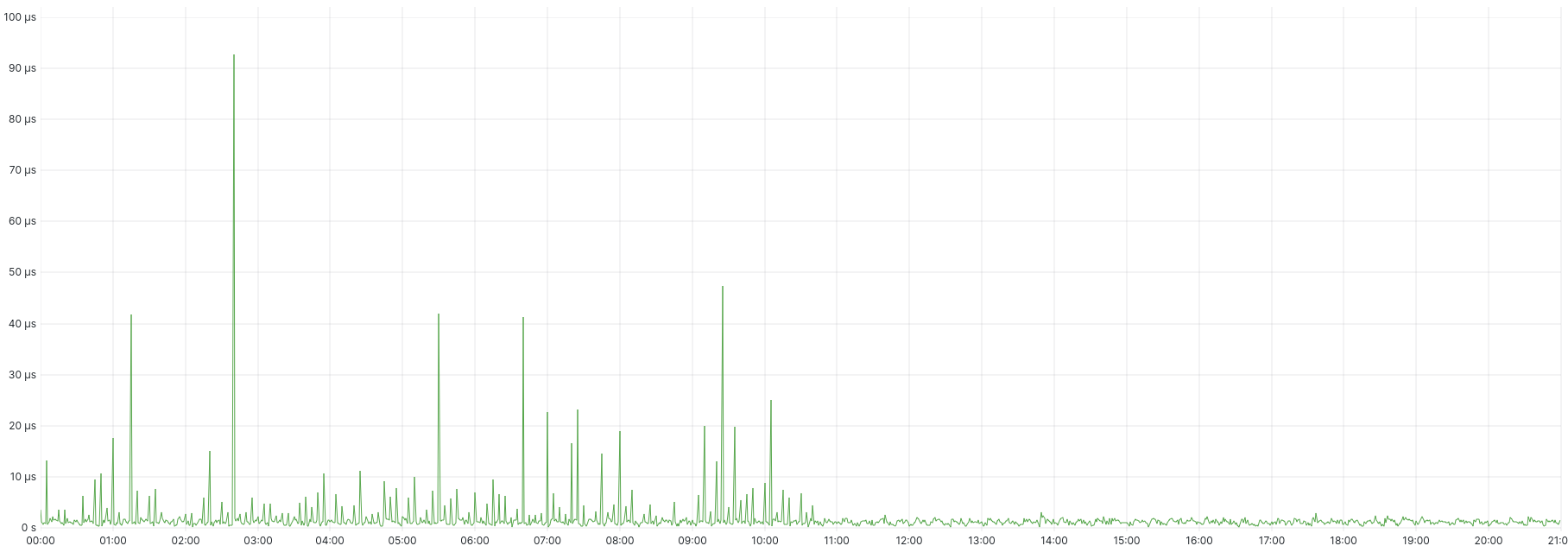

And root dispersion was marred by the large spikes in offset:

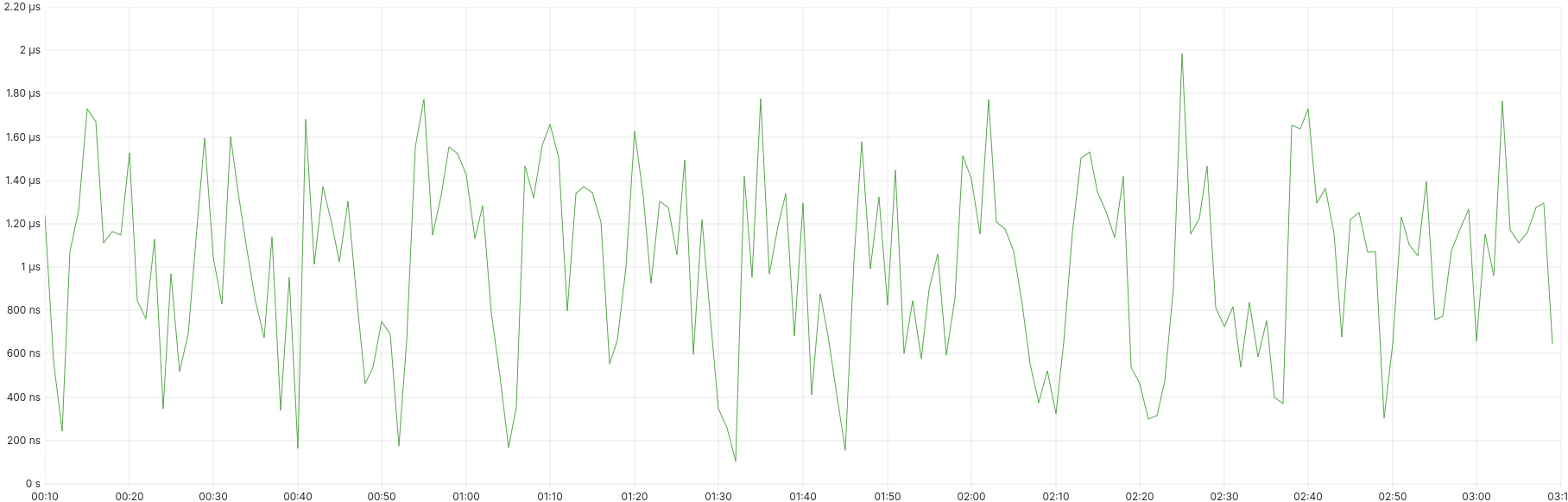

During the periods of very low offset, we had correspondingly low root dispersion, peaking at 2 µs:

Microsecond accurate time for the rest of us

Whilst the spiky nature of the offset during those short periods might be unacceptable for extremely fine-grained timekeeping applications, the simple fact that the average offset needs to be measured in double-digit nanoseconds means that we're in very good shape, and on average the results are quite comparable to those we obtained in Tokyo with the Nitro-based PHC. The KVM PHC also keeps time somewhat better in AWS than on my generic KVM host; I suspect that's due to a combination of higher performance hardware and their extensive tuning to reduce the hypervisor's overhead on customer workloads. Essentially, anyone can get microsecond-accurate time on AWS.

Well, almost anyone... The KVM PHC works only on Intel- and AMD-based instances; on ARM64 (Graviton) it gives an error on loading the kernel module saying that it's unsupported. (I'm not sure whether this is simply an oversight in their KVM builds, or if it's disabled on ARM for some other reason.) Older non-Nitro EC2 instance types based on the Xen hypervisor (like the venerable t2.micro on the free tier) will also not work.

Comparing apples with apples

After I started gathering data for this post, AWS announced support for the Nitro PHC on a large number of latest-generation instance types in us-east-1 (Northern Virginia) and ap-northeast-1 (Tokyo), including some AMD and Intel models. This gives us the opportunity to directly compare the KVM PHC and the Nitro PHC on otherwise identical instances.

This test used a c7a.large instance, which uses 2 vCPUs and 4 GB RAM on a host with an AMD EPYC 9R14 CPU with a maximum clock speed of 3.7 GHz. Like the previous test of the Nitro PHC, I used Amazon Linux 2023 on an IPv6-only instance. This time I used the us-east-1 (Northern Virginia) region.

The chrony pool configuration was the same:

    pool 2.pool.ntp.org iburst # the IPv6-only pool
    pool fd00:ec2::123 iburst # the AWS hypervisor network endpoint
    pool ntp.libertysys.com.au iburst # my public NTP servers (in Australia)

I enabled both the ptp_kvm driver and the PHC option on the ena driver. This caused two PTP device files to appear: /dev/ptp0 for the KVM PHC, and /dev/ptp1 for the ENA PHC:

    [ec2-user@i-058792d15454f23e5 ~]$ ls -la /dev/ptp*
    crw-------. 1 root root 245, 0 Apr 30 12:43 /dev/ptp0
    crw-------. 1 root root 245, 1 Apr 30 12:43 /dev/ptp1
    lrwxrwxrwx. 1 root root 4 Apr 30 12:43 /dev/ptp_kvm -> ptp0

First I tested chrony for about 10 hours using the KVM PHC, by adding this line to the configuration (see my previous post for a full explanation):

refclock PHC /dev/ptp0 poll 0 delay 0.000075 stratum 1

This resulted in system offsets with 5th/95th percentiles mostly in the ± 25 µs range, with spikes up to +60 and -140 µs:

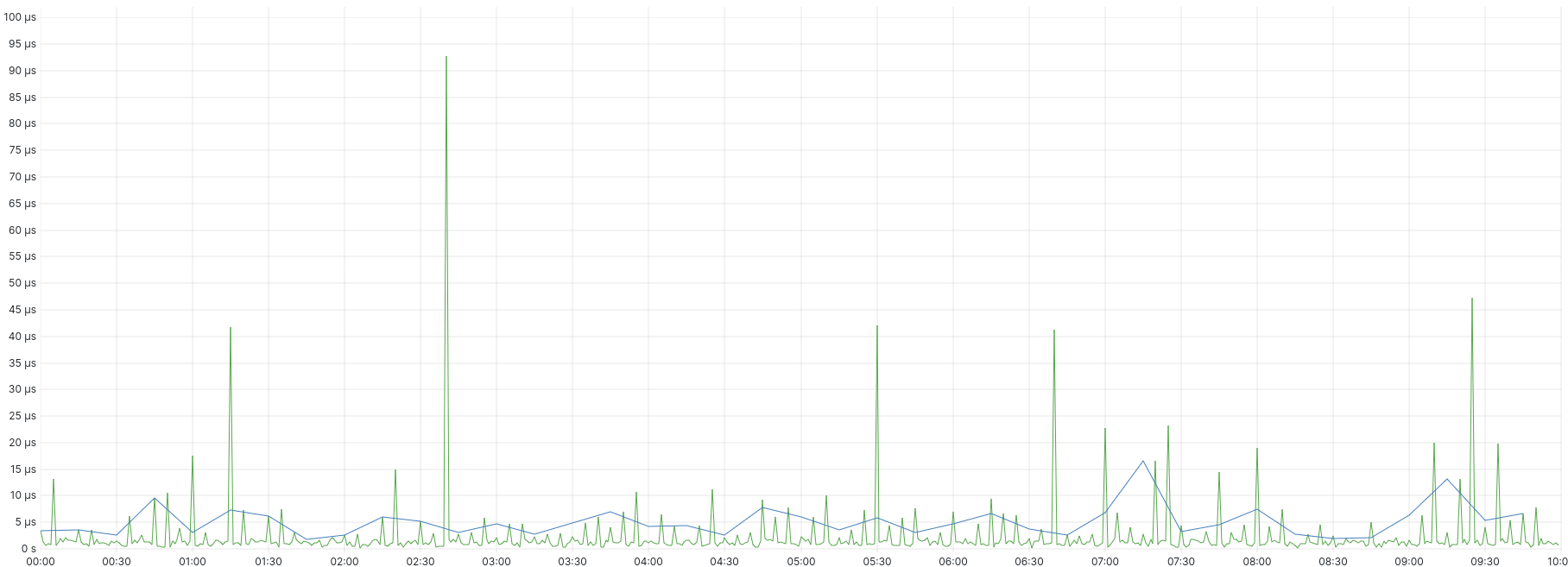

Over the same period, root dispersion had a maximum of approximately 95 µs, with 95th percentile (on 15-minute averages) not more than 20 µs:

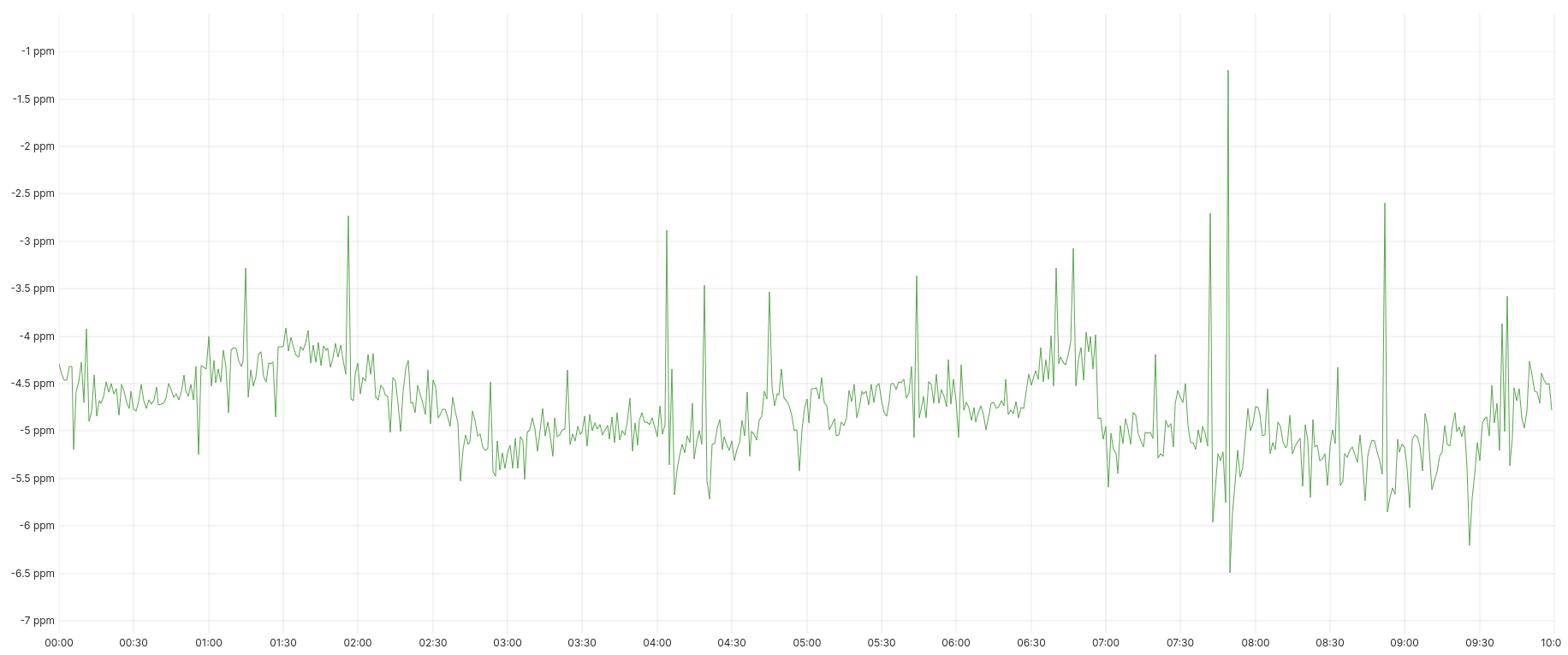

Frequency error ranged between -1 and -6.5 ppm:

The next step was to disable the KVM PHC and enable the ENA PHC by replacing the PHC device file in the chrony configuration:

refclock PHC /dev/ptp1 poll 0 delay 0.000075 stratum 1

The measurements for this period can be seen below. All of our system offset samples fell within single-digit microseconds, with 15-minute 5th/95th percentiles rarely hitting ± 2 µs:

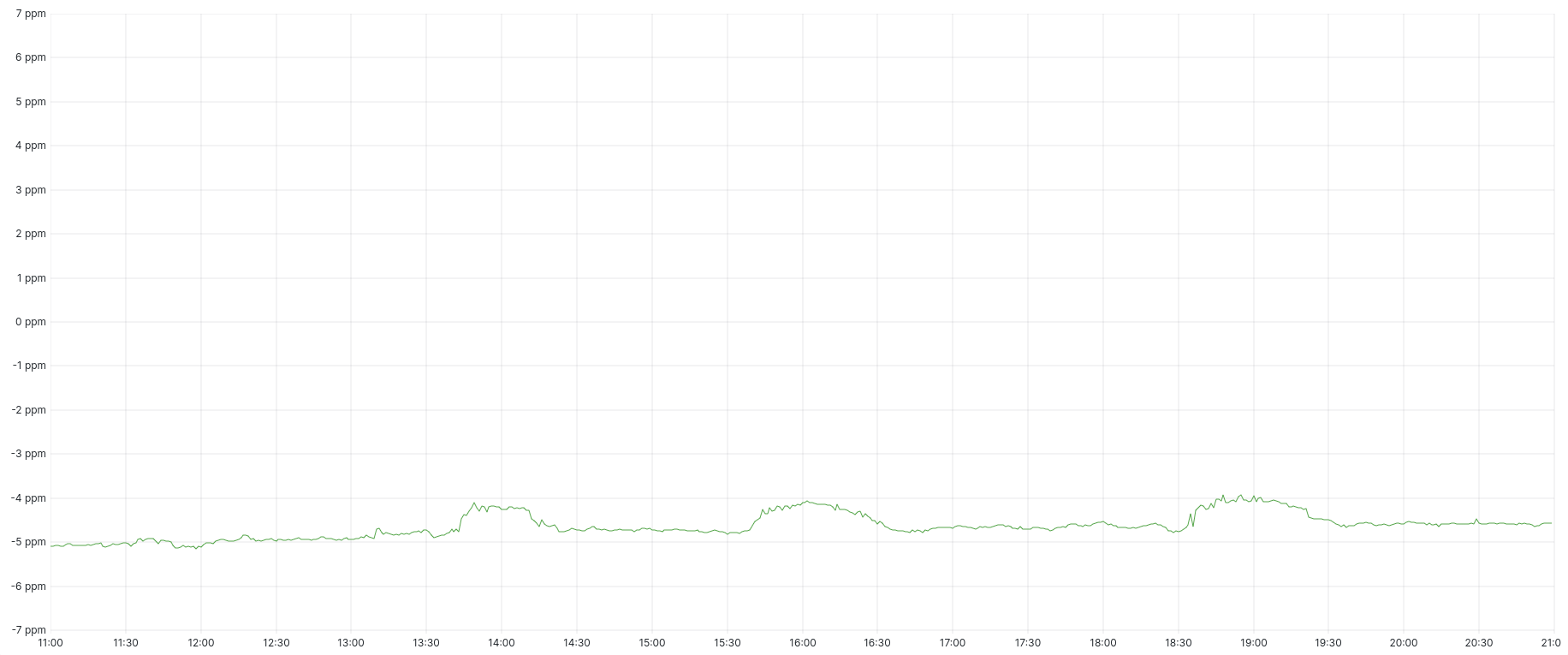

Frequency was more stable than the KVM PHC, ranging between -3.9 and -5.2 ppm, but had some interesting little "hills":

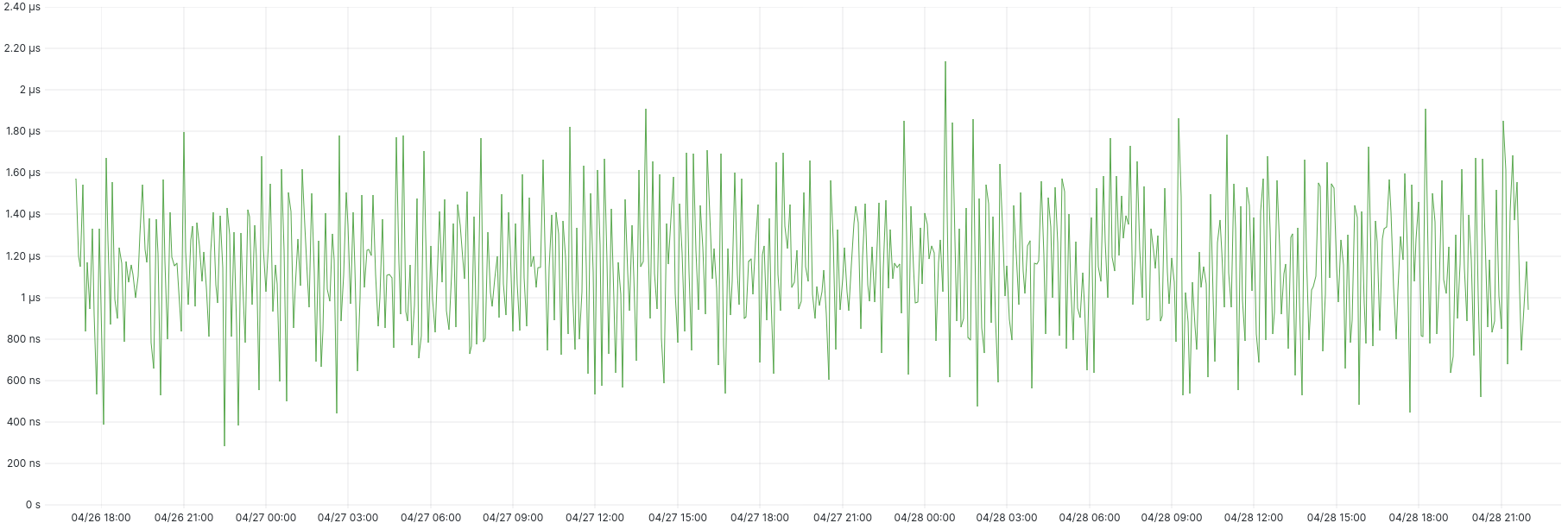

Root dispersion varied between approximately 0.6 and 1.8 µs, with only one sample over 2 µs:

We have a winner

So with AWS' latest iteration in us-east-1, the KVM PHC is mostly able to achieve low double-digit microsecond offsets, whilst the ENA PHC can achieve single-digit microsecond offsets. With the same PHC device configuration in chrony, they both exhibit similar root dispersion characteristics, with the ENA PHC providing a smaller and more stable frequency range. [Edit: The similar root dispersion is not particularly surprising given that we're setting the root delay to a fixed value and they're both low latency local device drivers. Perhaps in a future experiment I'll let them both provide their own root delay and see if it changes anything.]

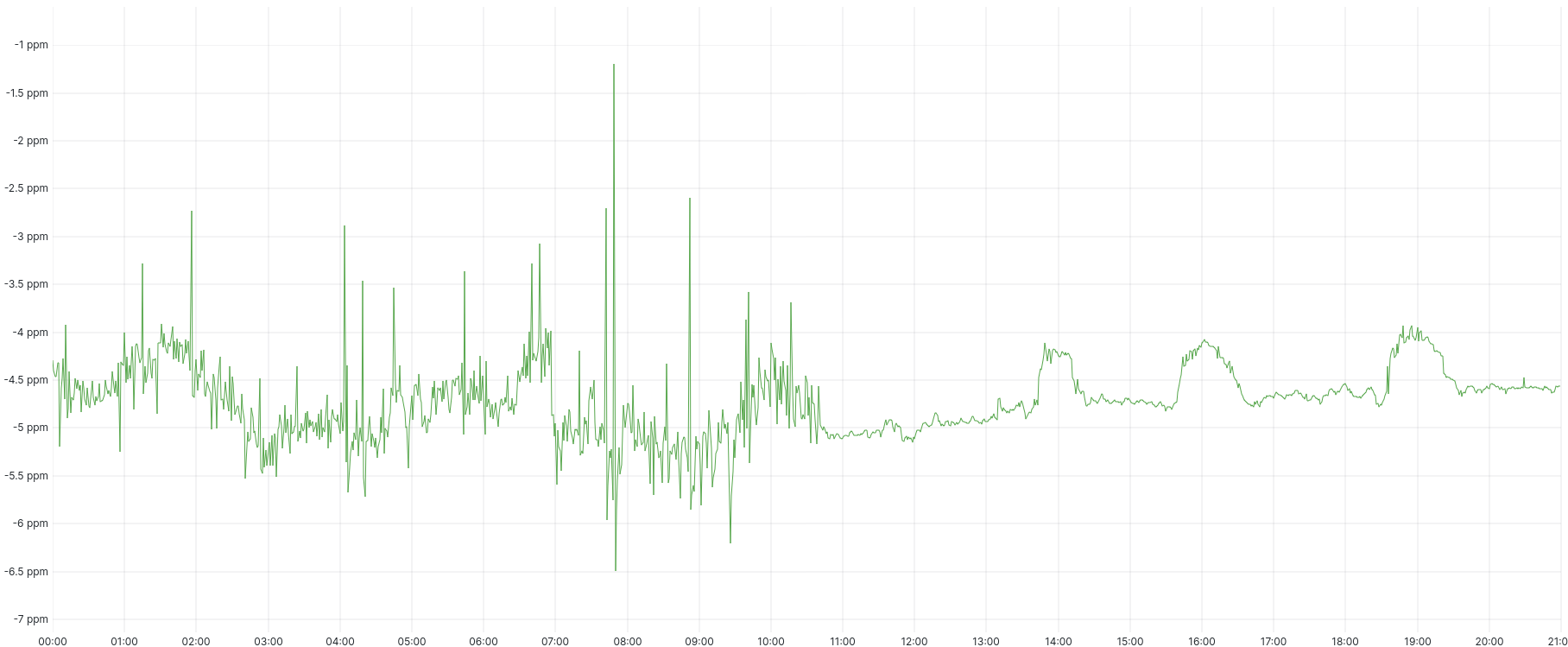

Here are the graphs showing both periods side by side for scale. System offset:

Frequency:

Root dispersion:

Applying some taco seasoning

Then I wondered: what if we take a hint from everyone's favourite fence-sitter? If we use the same refclock device configuration and enable both PHCs, which one will win?

Perhaps unsurprisingly given the above results, this worked out basically no differently to using the ENA PHC on its own. In fact, looking at the tracking log for the period after I enabled both PHCs, chrony did not switch to the KVM PHC as the sync peer even once.
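A check like this can be scripted. The sketch below counts how often each source appears as the selected reference in a chrony tracking log. It assumes the usual layout of that log (date, time, then the address or reference ID of the selected source as the third whitespace-separated field), and the function name is hypothetical. The reference IDs that show up for refclocks depend on the chrony configuration, so treat any specific names as assumptions.

```python
from collections import Counter


def count_sync_sources(lines):
    """Count how often each source was the selected reference.

    Expects lines in chrony tracking-log layout, where the third
    whitespace-separated field identifies the selected source.
    """
    counts = Counter()
    for line in lines:
        fields = line.split()
        # Skip blank lines and the banner/header lines repeated in the log;
        # data lines start with a date, so the first character is a digit.
        if len(fields) < 3 or not fields[0][:1].isdigit():
            continue
        counts[fields[2]] += 1
    return counts
```

Passing an open file handle for the tracking log (its location depends on the `logdir` setting) returns a `Counter`; a source that was never selected simply has a count of zero.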

So, two winners, actually

The Nitro system's ENA PHC is clearly the most consistent option for high-quality time synchronisation in AWS. Where it isn't available, the KVM PHC still produces excellent results, and given its near-ubiquitous availability on AMD- and Intel-based instances in AWS, it should be enabled whenever possible.

Related posts

- VM timekeeping: Using the PTP Hardware Clock on KVM

- AWS microsecond-accurate time: a first look

- What’s the time, Mister Cloud? An introduction to and experimental comparison of time synchronisation in AWS and Azure, part 1

- What’s the time, Mister Cloud? An introduction to and experimental comparison of time synchronisation in AWS and Azure, part 2

- What’s the time, Mister Cloud? An introduction to and experimental comparison of time synchronisation in AWS and Azure, part 3